Update Process

Before walking through the update process, let's review how Kubernetes releases work.

Why does Kubernetes need to be upgraded frequently?

- Support

- Security fixes

- Bug fixes

- Keeping dependencies up to date (CVEs)

Keeping Kubernetes up to date is an ongoing task that should be carried out at regular intervals.

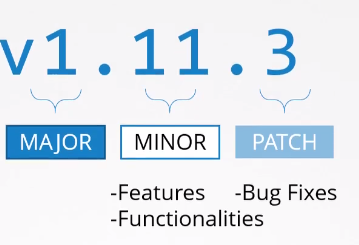

Always stay on the latest patch of your minor version. Patch releases usually ship at least once a month, and sooner when a fix is severe enough.

Sequence:

- Upgrade the master (control plane) components: apiserver, controller-manager, scheduler, and the kubelet when present.

  - 1.1 kubectl drain: evicts the pods from the node and prevents new pods from being scheduled onto it. The drain cordons the node automatically, so we are only responsible for the uncordon.

  - 1.2 Upgrade

  - 1.3 kubectl uncordon: releases the node so it can receive pods again.

- Upgrade the worker components: kubelet, kube-proxy. Same sequence as on the master, but for different components.

- Components should be on the same minor version as the apiserver. They do not have to be on the same patch, although that is preferable. Running up to one minor version below the apiserver is supported; the official version skew policy lists the exact limits per component.
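The version rule above can be checked mechanically. The sketch below is a minimal illustration, not an official tool: `minor_of` and `skew_ok` are hypothetical helper names, and the allowed skew is passed in by the caller rather than hard-coded, since the limit differs per component.

```shell
# minor_of VERSION: print the minor number of a version such as "v1.30.3".
minor_of() {
  local v="${1#v}"      # drop an optional leading "v"
  v="${v#*.}"           # drop the major part and its dot
  printf '%s\n' "${v%%.*}"
}

# skew_ok APISERVER COMPONENT MAX: succeed when COMPONENT is not newer than
# APISERVER and is at most MAX minor versions older.
skew_ok() {
  local api comp diff
  api=$(minor_of "$1")
  comp=$(minor_of "$2")
  diff=$(( api - comp ))
  [ "$diff" -ge 0 ] && [ "$diff" -le "$3" ]
}

# Usage:
#   skew_ok v1.31.0 v1.30.3 1 && echo "skew is acceptable"
```

Only the minor number is compared, so two different patches of the same minor always pass.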

How do we make applications survive an upgrade?

- Pod gracePeriod / Terminating state

- Pod lifecycle events

- PodDisruptionBudget
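As an illustration of the last item, a PodDisruptionBudget tells the eviction API used by `kubectl drain` how many pods of an application must stay available. The sketch below prints a minimal manifest; `render_pdb`, the `app` label key, and the example names are assumptions made for this example only.

```shell
# render_pdb NAME APP MIN_AVAILABLE: print a PodDisruptionBudget manifest that
# keeps at least MIN_AVAILABLE pods labelled app=APP running during evictions.
# Hypothetical helper; adjust the selector to the labels your workload uses.
render_pdb() {
  cat <<EOF
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: $1
spec:
  minAvailable: $3
  selector:
    matchLabels:
      app: $2
EOF
}

# Usage (needs cluster access):
#   render_pdb web-pdb web 1 | kubectl apply -f -
```

A budget only helps when the workload has more replicas than `minAvailable`; otherwise the drain waits on the eviction instead of completing.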

# We are on version 1.30.3

root@cks-master:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"30", GitVersion:"v1.30.3", GitCommit:"6fc0a69044f1ac4c13841ec4391224a2df241460", GitTreeState:"clean", BuildDate:"2024-07-16T23:53:15Z", GoVersion:"go1.22.5", Compiler:"gc", Platform:"linux/amd64"}

root@cks-master:~# sudo apt-get update

# In the same repository

root@cks-master:~# apt-cache show kubeadm | grep 1.30

Version: 1.30.4-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.4-1.1_amd64.deb

Version: 1.30.3-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.3-1.1_amd64.deb

Version: 1.30.2-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.2-1.1_amd64.deb

Version: 1.30.1-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.1-1.1_amd64.deb

MD5sum: 58d27614300af495d0a2b909d017729d

Version: 1.30.0-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.0-1.1_amd64.deb

Size: 10111304

root@cks-master:~#
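The listing above filters with `grep 1.30`, where `.` matches any character, which is why unrelated `MD5sum` and `Size` lines slip into the output. A stricter way to find the newest patch of a minor is sketched below; `latest_patch` is a hypothetical helper, not part of apt or kubeadm.

```shell
# latest_patch MINOR: read package versions (one per line) on stdin and print
# the highest one that starts with "MINOR.", for example "1.30.".
latest_patch() {
  local minor="$1"
  # index(...) == 1 is a literal prefix match, so the dot is not a wildcard.
  awk -v p="${minor}." 'index($0, p) == 1' | sort -V | tail -n 1
}

# Usage against the configured repositories (needs apt):
#   apt-cache madison kubeadm | awk '{print $3}' | latest_patch 1.30
```

`sort -V` orders version strings numerically by component, so a patch such as `1.30.10` sorts after `1.30.4`.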

# To move to another minor version, we need to add its repository.

# The repository keyring is the same for all of them, even though each entry here references its own keyring file.

root@cks-master:~# cat /etc/apt/sources.list.d/kubernetes.list

deb [signed-by=/etc/apt/keyrings/kubernetes-1-28-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /

deb [signed-by=/etc/apt/keyrings/kubernetes-1-29-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /

deb [signed-by=/etc/apt/keyrings/kubernetes-1-30-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /

## Add this line, changing only the version to 1.31

deb [signed-by=/etc/apt/keyrings/kubernetes-1-30-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /

...
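Since only the minor version changes between repository entries, the line can be generated instead of typed by hand. A minimal sketch, assuming the keyring path is passed explicitly (`repo_line` is a hypothetical helper name):

```shell
# repo_line MINOR KEYRING: print the apt source line for one Kubernetes minor.
repo_line() {
  printf 'deb [signed-by=%s] https://pkgs.k8s.io/core:/stable:/v%s/deb/ /\n' "$2" "$1"
}

# Usage (appends to the sources file, so run it once per minor):
#   repo_line 1.31 /etc/apt/keyrings/kubernetes-1-30-apt-keyring.gpg \
#     | sudo tee -a /etc/apt/sources.list.d/kubernetes.list
```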

# Just to show that the keyring files are identical (diff prints nothing)

root@cks-master:/etc/apt/keyrings# ls

kubernetes-1-28-apt-keyring.gpg kubernetes-1-30-apt-keyring.gpg

kubernetes-1-29-apt-keyring.gpg

root@cks-master:/etc/apt/keyrings# diff kubernetes-1-28-apt-keyring.gpg kubernetes-1-29-apt-keyring.gpg

root@cks-master:/etc/apt/keyrings# diff kubernetes-1-29-apt-keyring.gpg kubernetes-1-30-apt-keyring.gpg

root@cks-master:/etc/apt/keyrings# sudo apt-get update

Hit:1 http://us-central1.gce.archive.ubuntu.com/ubuntu focal InRelease

Hit:2 http://us-central1.gce.archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:3 http://us-central1.gce.archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:8 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:4 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.28/deb InRelease

Hit:5 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:6 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.30/deb InRelease

Hit:7 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb InRelease # <<<<

root@cks-master:/etc/apt/keyrings# apt-cache show kubeadm | grep 1.3

# 1.31 is now available as an option too.

Version: 1.31.0-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.31.0-1.1_amd64.deb

Size: 11384872

MD5sum: c35f592acdace32ace9b51bf0fa0d1e3

SHA1: 1a43621c360d41fe4d41e3885acecf4b1a9beacc

Version: 1.30.4-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.4-1.1_amd64.deb

Size: 10380296

SHA256: 5cb72d00ea4427cc17366147007f2ea2c3da7250b422836c212d6ac3d9c51a17

Version: 1.30.3-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.3-1.1_amd64.deb

Size: 10381024

Version: 1.30.2-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.2-1.1_amd64.deb

Size: 10383332

Version: 1.30.1-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.1-1.1_amd64.deb

Size: 10378004

MD5sum: 58d27614300af495d0a2b909d017729d

Version: 1.30.0-1.1

Depends: cri-tools (>= 1.30.0)

Filename: amd64/kubeadm_1.30.0-1.1_amd64.deb

Size: 10381620

SHA256: 8b37a4db169876432ecc6cc6be2c9502680257d5bcfcd139fd1a38168412853d

MD5sum: 79cadd828764026b106eead11c37a830

Size: 10111304

MD5sum: 3d9cd4b6284efafe8f8d55ab7f214317

SHA1: f6f7a6ae4697ec0a7ba5da4db1d31463b46d13df

SHA256: 8d268ba75badfdb0f60c95dbb0711137fb6a1bc2f212ee212a3aadb1c1cde219

SHA256: 0cd6a9b61a59b0a6592420a425794de2103edb93d49ff8f54b4a93166beb0a07

MD5sum: 38e89f8e371a318842e929902aa5bf18

MD5sum: 422123f5fa5208fb506651610ddd17ec

SHA256: c8f73aaa8415001b03fe0f193317bd8b4ec1541ed28f74eacfbd86213ea6f667

# At install time we usually put the packages on hold so that an apt upgrade does not update them

root@cks-master:~# apt-mark unhold kubeadm

Canceled hold on kubeadm.

root@cks-master:~# apt-mark hold kubectl kubelet

kubectl was already set on hold.

kubelet was already set on hold.

root@cks-master:~# apt install kubeadm=1.31.0-1.1

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubeadm

1 upgraded, 0 newly installed, 0 to remove and 9 not upgraded.

Need to get 11.4 MB of archives.

After this operation, 8040 kB of additional disk space will be used.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubeadm 1.31.0-1.1 [11.4 MB]

Fetched 11.4 MB in 0s (31.0 MB/s)

(Reading database ... 92983 files and directories currently installed.)

Preparing to unpack .../kubeadm_1.31.0-1.1_amd64.deb ...

Unpacking kubeadm (1.31.0-1.1) over (1.30.3-1.1) ...

Setting up kubeadm (1.31.0-1.1) ...

root@cks-master:~#

root@cks-master:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"31", GitVersion:"v1.31.0", GitCommit:"9edcffcde5595e8a5b1a35f88c421764e575afce", GitTreeState:"clean", BuildDate:"2024-08-13T07:35:57Z", GoVersion:"go1.22.5", Compiler:"gc", Platform:"linux/amd64"}
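The unhold, install, hold pattern used here repeats for every package in this walkthrough. The sketch below only prints the commands so they can be reviewed first; `pkg_upgrade_cmds` is a hypothetical helper, and re-holding the package immediately after the install is a choice made for this example.

```shell
# pkg_upgrade_cmds VERSION PKG...: print, without running them, the commands
# that move the given packages to VERSION and put them on hold again.
pkg_upgrade_cmds() {
  local version="$1"; shift
  local pkg specs=""
  for pkg in "$@"; do
    specs="$specs $pkg=$version"
  done
  printf 'apt-mark unhold %s\n' "$*"
  printf 'apt-get install -y%s\n' "$specs"
  printf 'apt-mark hold %s\n' "$*"
}

# Usage: review the output, then run it as root.
#   pkg_upgrade_cmds 1.31.0-1.1 kubeadm
```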

With kubeadm at the version we want, we can now start preparing the node.

# Draining the pods and putting the node into maintenance mode.

root@cks-master:~# kubectl drain cks-master --ignore-daemonsets

# Checking what is available to upgrade

root@cks-master:~# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: 1.30.3

[upgrade/versions] kubeadm version: v1.31.0

[upgrade/versions] Target version: v1.31.0

[upgrade/versions] Latest version in the v1.30 series: v1.30.4

# Note that we can upgrade to the latest patch of the current minor (first plan below) or to the next minor (second plan below)

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT NODE CURRENT TARGET

kubelet cks-master v1.30.3 v1.30.4

kubelet cks-worker v1.30.3 v1.30.4

Upgrade to the latest version in the v1.30 series:

COMPONENT NODE CURRENT TARGET

kube-apiserver cks-master v1.30.3 v1.30.4

kube-controller-manager cks-master v1.30.3 v1.30.4

kube-scheduler cks-master v1.30.3 v1.30.4

kube-proxy 1.30.3 v1.30.4

CoreDNS v1.11.1 v1.11.1

etcd cks-master 3.5.12-0 3.5.15-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.30.4

_____________________________________________________________________

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT NODE CURRENT TARGET

kubelet cks-master v1.30.3 v1.31.0

kubelet cks-worker v1.30.3 v1.31.0

Upgrade to the latest stable version:

COMPONENT NODE CURRENT TARGET

kube-apiserver cks-master v1.30.3 v1.31.0

kube-controller-manager cks-master v1.30.3 v1.31.0

kube-scheduler cks-master v1.30.3 v1.31.0

kube-proxy 1.30.3 v1.31.0

CoreDNS v1.11.1 v1.11.1

etcd cks-master 3.5.12-0 3.5.15-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.31.0

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

kubelet.config.k8s.io v1beta1 v1beta1 no

_____________________________________________________________________

root@cks-master:~# kubeadm upgrade apply v1.31.0

[preflight] Running pre-flight checks.

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[upgrade] Running cluster health checks

[upgrade/version] You have chosen to change the cluster version to "v1.31.0"

[upgrade/versions] Cluster version: v1.30.3

[upgrade/versions] kubeadm version: v1.31.0

[upgrade] Are you sure you want to proceed? [y/N]: y

[upgrade/prepull] Pulling images required for setting up a Kubernetes cluster

[upgrade/prepull] This might take a minute or two, depending on the speed of your internet connection

[upgrade/prepull] You can also perform this action beforehand using 'kubeadm config images pull'

W0826 13:02:36.593754 2105267 checks.go:846] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm.It is recommended to use "registry.k8s.io/pause:3.10" as the CRI sandbox image.

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.31.0" (timeout: 5m0s)...

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests2208171907"

[upgrade/staticpods] Preparing for "etcd" upgrade

[upgrade/staticpods] Renewing etcd-server certificate

[upgrade/staticpods] Renewing etcd-peer certificate

[upgrade/staticpods] Renewing etcd-healthcheck-client certificate

[upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/etcd.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-08-26-13-02-49/etcd.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This can take up to 5m0s

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-08-26-13-02-49/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This can take up to 5m0s

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moving new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backing up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2024-08-26-13-02-49/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This can take up to 5m0s

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upgrade] Backing up kubelet config file to /etc/kubernetes/tmp/kubeadm-kubelet-config802365349/config.yaml

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.31.0". Enjoy!

# Note at the end that the next step is to upgrade the kubelet

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you havent already done so.

root@cks-master:~# kubelet --version

Kubernetes v1.30.3

root@cks-master:~# kubectl version

Client Version: v1.30.3

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: v1.31.0

root@cks-master:~# apt-mark unhold kubelet kubectl

Canceled hold on kubelet.

Canceled hold on kubectl.

root@cks-master:~# apt install kubelet=1.31.0-1.1 kubectl=1.31.0-1.1

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubectl kubelet

2 upgraded, 0 newly installed, 0 to remove and 8 not upgraded.

Need to get 26.4 MB of archives.

After this operation, 18.3 MB disk space will be freed.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubectl 1.31.0-1.1 [11.2 MB]

Get:2 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubelet 1.31.0-1.1 [15.2 MB]

Fetched 26.4 MB in 1s (42.7 MB/s)

(Reading database ... 92983 files and directories currently installed.)

Preparing to unpack .../kubectl_1.31.0-1.1_amd64.deb ...

Unpacking kubectl (1.31.0-1.1) over (1.30.3-1.1) ...

Preparing to unpack .../kubelet_1.31.0-1.1_amd64.deb ...

Unpacking kubelet (1.31.0-1.1) over (1.30.3-1.1) ...

Setting up kubectl (1.31.0-1.1) ...

Setting up kubelet (1.31.0-1.1) ...

# Preventing accidental upgrades

root@cks-master:~# apt-mark hold kubeadm kubelet kubectl

kubeadm set on hold.

kubelet set on hold.

kubectl set on hold.

# The kubelet must be restarted because the running process is still the previous version.

root@cks-master:~# service kubelet restart

root@cks-master:~# service kubelet status

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor prese>

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2024-08-26 13:13:09 UTC; 11s ago

Docs: https://kubernetes.io/docs/

Main PID: 2110314 (kubelet)

Tasks: 12 (limit: 4680)

Memory: 26.5M

CGroup: /system.slice/kubelet.service

└─2110314 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/>

# Running kubeadm upgrade plan again to see where we stand

root@cks-master:~# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: 1.31.0

[upgrade/versions] kubeadm version: v1.31.0

[upgrade/versions] Target version: v1.31.0

[upgrade/versions] Latest version in the v1.31 series: v1.31.0

root@cks-master:~# k get nodes

NAME STATUS ROLES AGE VERSION

cks-master Ready,SchedulingDisabled control-plane 10d v1.31.0

cks-worker Ready <none> 10d v1.30.3

root@cks-master:~# kubectl uncordon cks-master

node/cks-master uncordoned

root@cks-master:~# k get nodes

NAME STATUS ROLES AGE VERSION

cks-master Ready control-plane 10d v1.31.0

cks-worker Ready <none> 10d v1.30.3 # still on the previous version.

For the worker node the steps are practically the same, but a little simpler.
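The order of the worker steps that follow can be summarized as a checklist. The sketch below prints the commands in sequence without running them; `worker_upgrade_cmds` and the bracketed host prefixes are conventions invented for this example.

```shell
# worker_upgrade_cmds NODE VERSION: print the ordered steps for one worker.
# Lines prefixed with [control-plane] run where kubectl has admin access;
# lines prefixed with [NODE] run on the worker itself.
worker_upgrade_cmds() {
  local node="$1" version="$2"
  cat <<EOF
[control-plane] kubectl drain $node --ignore-daemonsets
[$node] apt-mark unhold kubeadm && apt-get install -y kubeadm=$version && apt-mark hold kubeadm
[$node] kubeadm upgrade node
[$node] apt-mark unhold kubelet kubectl && apt-get install -y kubelet=$version kubectl=$version && apt-mark hold kubelet kubectl
[$node] service kubelet restart
[control-plane] kubectl uncordon $node
EOF
}

# Usage:
#   worker_upgrade_cmds cks-worker 1.31.0-1.1
```

The difference from the control plane is the third step: a worker runs `kubeadm upgrade node` instead of `kubeadm upgrade apply`.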

# Still on the master, drain the node we want to upgrade.

# Take note of the error below; we will look at why it happens later

root@cks-master:~# k drain cks-worker --ignore-daemonsets

node/cks-worker already cordoned

error: unable to drain node "cks-worker" due to error: cannot delete cannot delete Pods that declare no controller (use --force to override): default/app1, default/app2, continuing command...

There are pending nodes to be drained:

cks-worker

cannot delete cannot delete Pods that declare no controller (use --force to override): default/app1, default/app2

# Without the problem above the drain would have worked, so let's force it.

root@cks-master:~# k drain cks-worker --ignore-daemonsets --delete-emptydir-data --force

node/cks-worker already cordoned

Warning: deleting Pods that declare no controller: default/app1, default/app2; ignoring DaemonSet-managed Pods: kube-system/canal-665nh, kube-system/kube-proxy-w2xzr

evicting pod kube-system/calico-kube-controllers-75bdb5b75d-bnfwm

evicting pod kubernetes-dashboard/kubernetes-dashboard-kong-7696bb8c88-v4zk6

evicting pod kube-system/coredns-7db6d8ff4d-66cj6

evicting pod kube-system/coredns-7db6d8ff4d-7gsth

evicting pod kubernetes-dashboard/kubernetes-dashboard-api-9567bc759-mdvl5

evicting pod kubernetes-dashboard/kubernetes-dashboard-auth-784d848dcb-p686b

evicting pod kubernetes-dashboard/kubernetes-dashboard-metrics-scraper-5485b64c47-9fkpb

evicting pod ingress-nginx/ingress-nginx-admission-create-tmn2q

evicting pod default/app1

evicting pod default/app2

evicting pod ingress-nginx/ingress-nginx-admission-patch-5dv4z

evicting pod ingress-nginx/ingress-nginx-controller-7d4db76476-np7x6

evicting pod kubernetes-dashboard/kubernetes-dashboard-web-84f8d6fff4-t4qgq

pod/ingress-nginx-admission-create-tmn2q evicted

pod/ingress-nginx-admission-patch-5dv4z evicted

I0826 13:19:04.152398 2112378 request.go:700] Waited for 1.000660244s due to client-side throttling, not priority and fairness, request: GET:https://10.128.0.5:6443/api/v1/namespaces/kubernetes-dashboard/pods/kubernetes-dashboard-web-84f8d6fff4-t4qgq

pod/calico-kube-controllers-75bdb5b75d-bnfwm evicted

pod/app2 evicted

pod/kubernetes-dashboard-web-84f8d6fff4-t4qgq evicted

pod/app1 evicted

pod/kubernetes-dashboard-auth-784d848dcb-p686b evicted

pod/kubernetes-dashboard-metrics-scraper-5485b64c47-9fkpb evicted

pod/kubernetes-dashboard-api-9567bc759-mdvl5 evicted

pod/coredns-7db6d8ff4d-66cj6 evicted

pod/coredns-7db6d8ff4d-7gsth evicted

pod/ingress-nginx-controller-7d4db76476-np7x6 evicted

pod/kubernetes-dashboard-kong-7696bb8c88-v4zk6 evicted

node/cks-worker drained

root@cks-master:~# k get nodes

NAME STATUS ROLES AGE VERSION

cks-master Ready control-plane 10d v1.31.0

cks-worker Ready,SchedulingDisabled <none> 10d v1.30.3

# Switching to the worker now...

# Add the repository as we did on the master

root@cks-worker:~# vim /etc/apt/sources.list.d/kubernetes.list

root@cks-worker:~# apt update

Hit:1 http://us-central1.gce.archive.ubuntu.com/ubuntu focal InRelease

Get:2 http://us-central1.gce.archive.ubuntu.com/ubuntu focal-updates InRelease [128 kB]

Hit:3 http://us-central1.gce.archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:5 http://security.ubuntu.com/ubuntu focal-security InRelease

Hit:4 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.28/deb InRelease

Hit:6 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.29/deb InRelease

Hit:7 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.30/deb InRelease

Get:8 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb InRelease [1186 B]

Get:9 http://us-central1.gce.archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [3533 kB]

Get:10 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb Packages [3167 B]

Fetched 3665 kB in 2s (2270 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

11 packages can be upgraded. Run 'apt list --upgradable' to see them.

root@cks-worker:~# apt-cache show kubeadm | grep 1.31

Version: 1.31.0-1.1

Filename: amd64/kubeadm_1.31.0-1.1_amd64.deb

MD5sum: 3d9cd4b6284efafe8f8d55ab7f214317

SHA1: f6f7a6ae4697ec0a7ba5da4db1d31463b46d13df

MD5sum: 38e89f8e371a318842e929902aa5bf18

root@cks-worker:~# apt-mark unhold kubeadm

Canceled hold on kubeadm.

# This step is just to be safe

root@cks-worker:~# apt-mark hold kubelet kubectl

kubelet was already set on hold.

kubectl was already set on hold.

# Upgrading kubeadm

root@cks-worker:~# apt install kubeadm=1.31.0-1.1

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubeadm

1 upgraded, 0 newly installed, 0 to remove and 10 not upgraded.

Need to get 11.4 MB of archives.

After this operation, 8040 kB of additional disk space will be used.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubeadm 1.31.0-1.1 [11.4 MB]

Fetched 11.4 MB in 2s (5588 kB/s)

(Reading database ... 92983 files and directories currently installed.)

Preparing to unpack .../kubeadm_1.31.0-1.1_amd64.deb ...

Unpacking kubeadm (1.31.0-1.1) over (1.30.3-1.1) ...

Setting up kubeadm (1.31.0-1.1) ...

root@cks-worker:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"31", GitVersion:"v1.31.0", GitCommit:"9edcffcde5595e8a5b1a35f88c421764e575afce", GitTreeState:"clean", BuildDate:"2024-08-13T07:35:57Z", GoVersion:"go1.22.5", Compiler:"gc", Platform:"linux/amd64"}

root@cks-worker:~# apt-mark hold kubeadm

kubeadm set on hold.

# Running the upgrade with kubeadm

root@cks-worker:~# kubeadm upgrade node

[upgrade] Reading configuration from the cluster...

[upgrade] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks

[preflight] Skipping prepull. Not a control plane node.

[upgrade] Skipping phase. Not a control plane node.

[upgrade] Skipping phase. Not a control plane node.

[upgrade] Backing up kubelet config file to /etc/kubernetes/tmp/kubeadm-kubelet-config319963896/config.yaml

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[upgrade] The configuration for this node was successfully updated!

[upgrade] Now you should go ahead and upgrade the kubelet package using your package manager. # <<<

root@cks-worker:~# apt-mark unhold kubelet kubectl

Canceled hold on kubelet.

Canceled hold on kubectl.

root@cks-worker:~# apt install kubelet=1.31.0-1.1 kubectl=1.31.0-1.1 -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubectl kubelet

2 upgraded, 0 newly installed, 0 to remove and 8 not upgraded.

Need to get 26.4 MB of archives.

After this operation, 18.3 MB disk space will be freed.

Get:1 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubectl 1.31.0-1.1 [11.2 MB]

Get:2 https://prod-cdn.packages.k8s.io/repositories/isv:/kubernetes:/core:/stable:/v1.31/deb kubelet 1.31.0-1.1 [15.2 MB]

Fetched 26.4 MB in 1s (42.5 MB/s)

(Reading database ... 92983 files and directories currently installed.)

Preparing to unpack .../kubectl_1.31.0-1.1_amd64.deb ...

Unpacking kubectl (1.31.0-1.1) over (1.30.3-1.1) ...

Preparing to unpack .../kubelet_1.31.0-1.1_amd64.deb ...

Unpacking kubelet (1.31.0-1.1) over (1.30.3-1.1) ...

Setting up kubectl (1.31.0-1.1) ...

Setting up kubelet (1.31.0-1.1) ...

root@cks-worker:~# service kubelet restart

root@cks-worker:~# service kubelet status

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor prese>

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2024-08-26 13:29:33 UTC; 5s ago

Docs: https://kubernetes.io/docs/

Main PID: 2559575 (kubelet)

Tasks: 13 (limit: 4680)

Memory: 23.6M

CGroup: /system.slice/kubelet.service

└─2559575 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/>

...

root@cks-worker:~# apt-mark hold kubelet kubectl

kubelet set on hold.

kubectl set on hold.

# Back on the master, let's check the nodes

root@cks-master:~# k get nodes

NAME STATUS ROLES AGE VERSION

cks-master Ready control-plane 10d v1.31.0

cks-worker Ready,SchedulingDisabled <none> 10d v1.31.0

# Releasing cks-worker to receive pods again

root@cks-master:~# k uncordon cks-worker

node/cks-worker uncordoned

root@cks-master:~# k get nodes

NAME STATUS ROLES AGE VERSION

cks-master Ready control-plane 10d v1.31.0

cks-worker Ready <none> 10d v1.31.0
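The node listings used throughout this walkthrough to check progress can be filtered so that only nodes still on an older version show up. A minimal sketch, assuming the default column layout of `kubectl get nodes` with VERSION as the last column (`nodes_behind` is a hypothetical helper):

```shell
# nodes_behind TARGET: read `kubectl get nodes` output on stdin and print the
# name and version of every node whose VERSION column differs from TARGET.
nodes_behind() {
  awk -v target="$1" 'NR > 1 && $NF != target { print $1, $NF }'
}

# Usage (needs cluster access):
#   kubectl get nodes | nodes_behind v1.31.0
```

An empty result means every node reports the target version.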

# Why are the pods app1 and app2 no longer there?

root@cks-master:~# k get pods

No resources found in default namespace.

Answering the question above...

This happens because the pods app1 and app2 in the default namespace are not managed by a controller (such as a Deployment, StatefulSet, etc.), so nothing recreates them automatically. When you drain the node, kubectl refuses to delete such pods by default, to avoid interrupting applications that cannot be recreated easily.

The kubectl drain command stops because it tries to prevent accidental deletion of unmanaged pods. To force the deletion of these pods and continue the drain, add the --force flag to the command:

kubectl drain cks-worker --ignore-daemonsets --delete-emptydir-data --force

Use this option with care, because the deleted pods will not be recreated automatically. If these pods matter and must not be lost, find out why they have no controller and consider putting them under a suitable one.

A pod without a controller will not come back, so always run pods under a Deployment or another controller.
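Pods without a controller can be found before draining, so that nothing is lost by surprise. The sketch below filters a three-column listing for pods with no owner; `bare_pods` is a hypothetical helper, and it assumes kubectl prints `<none>` for a missing `ownerReferences` entry in custom-columns output.

```shell
# bare_pods: read "NAMESPACE NAME OWNER" rows (with a header line) on stdin and
# print namespace/name for every pod whose OWNER column is "<none>".
bare_pods() {
  awk 'NR > 1 && $3 == "<none>" { print $1 "/" $2 }'
}

# Usage (needs cluster access):
#   kubectl get pods -A --field-selector spec.nodeName=cks-worker \
#     -o custom-columns='NS:.metadata.namespace,NAME:.metadata.name,OWNER:.metadata.ownerReferences[0].kind' \
#     | bare_pods
```

Any pod this prints would be deleted for good by a forced drain and is a candidate for moving under a Deployment first.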