Docker Swarm - Part 3

Node availability

What happens when one of our nodes goes down? Where do the containers running on it go?

To find out, we will reuse the ping service from the scaling exercise, this time starting it with 5 replicas.

vagrant@master:~$ docker service create --name pinger --replicas 5 registry.docker-dca.example:5000/alpine ping google.com

#...

vagrant@master:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

dd0grakiu2ow pinger replicated 5/5 registry.docker-dca.example:5000/alpine:latest

vagrant@master:~$

vagrant@master:~$ docker service ps pinger --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

slkn7snmuo4l pinger.1 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 2 minutes ago

rwk8i4r1wsvb pinger.2 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 2 minutes ago

6qhufz7xf5kf pinger.3 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Running Running 2 minutes ago

697qkomicb6w pinger.4 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 2 minutes ago

puca1zg6l61n pinger.5 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Running Running 2 minutes ago

Now let's imagine that worker2 has a problem and stops. Swarm itself will reschedule its containers onto other nodes. Let's test it.

❯ vagrant halt worker2

==> worker2: Attempting graceful shutdown of VM...

Now let's check.

vagrant@master:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

dd0grakiu2ow pinger replicated 7/5 registry.docker-dca.example:5000/alpine:latest

vagrant@master:~$ docker service ps pinger

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

slkn7snmuo4l pinger.1 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 5 minutes ago

uqbq7n4x61fd \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 5 minutes ago "No such image: registry.docke…"

xw7wspjvl6c2 \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 6 minutes ago "No such image: registry.docke…"

ivgkwsejvm1n \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 6 minutes ago "No such image: registry.docke…"

rwk8i4r1wsvb pinger.2 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 6 minutes ago

neu2uugr2cag pinger.3 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 17 seconds ago

rfy2lisfrz06 \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 28 seconds ago "No such image: registry.docke…"

gag78grl9krw \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 33 seconds ago "No such image: registry.docke…"

476xl49hc75k \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 38 seconds ago "No such image: registry.docke…"

6qhufz7xf5kf \_ pinger.3 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Shutdown Running 5 minutes ago

697qkomicb6w pinger.4 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 6 minutes ago

h5ogmu03mth5 pinger.5 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 17 seconds ago

pnmp0cf22mb4 \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 28 seconds ago "No such image: registry.docke…"

p4cmg1dntadm \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 33 seconds ago "No such image: registry.docke…"

qmb5ch04n1si \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 38 seconds ago "No such image: registry.docke…"

puca1zg6l61n \_ pinger.5 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Shutdown Running 6 minutes ago

Looking at what happened, the service now shows 7/5 because Swarm started two new tasks while the previous ones are still kept in the task history. Let's bring worker2 back up.

❯ vagrant up worker2

And checking again:

vagrant@master:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

dd0grakiu2ow pinger replicated 5/5 registry.docker-dca.example:5000/alpine:latest

vagrant@master:~$ docker service ps pinger

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1ppd0q97wdcx pinger.1 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 4 minutes ago

slkn7snmuo4l \_ pinger.1 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Shutdown Shutdown 4 minutes ago

uqbq7n4x61fd \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 12 minutes ago "No such image: registry.docke…"

xw7wspjvl6c2 \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 12 minutes ago "No such image: registry.docke…"

ivgkwsejvm1n \_ pinger.1 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 12 minutes ago "No such image: registry.docke…"

rwk8i4r1wsvb pinger.2 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 12 minutes ago

neu2uugr2cag pinger.3 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 7 minutes ago

rfy2lisfrz06 \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

gag78grl9krw \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

476xl49hc75k \_ pinger.3 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

6qhufz7xf5kf \_ pinger.3 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Shutdown Shutdown about a minute ago

92yxi5wjb3xn pinger.4 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 4 minutes ago

697qkomicb6w \_ pinger.4 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Shutdown Shutdown 4 minutes ago

h5ogmu03mth5 pinger.5 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 7 minutes ago

pnmp0cf22mb4 \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

p4cmg1dntadm \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

qmb5ch04n1si \_ pinger.5 registry.docker-dca.example:5000/alpine:latest master.docker-dca.example Shutdown Rejected 7 minutes ago "No such image: registry.docke…"

puca1zg6l61n \_ pinger.5 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Shutdown Shutdown about a minute ago

vagrant@master:~$

That is not the right way to take a node out for maintenance. For that we need to drain it, that is, remove everything running on it; Docker Swarm then automatically reschedules those tasks on an available node.

Let's drain everything on worker2 now and see what happens.

vagrant@master:~$ docker node ls

# every node is Active; we will drain registry and worker2

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

jdmwyhbti8s3fnmd17lw79rhw * master.docker-dca.example Ready Active Leader 20.10.17

qvp6um8mstrgrlhhfpjj6khdc registry.docker-dca.example Ready Active 20.10.17

rxgmhpjtky4s6mktwis2jyr99 worker1.docker-dca.example Ready Active 20.10.17

7980uc978wk928ncb6esv3jy3 worker2.docker-dca.example Ready Active 20.10.17

vagrant@master:~$ docker node update worker2.docker-dca.example --availability drain

worker2.docker-dca.example

vagrant@master:~$ docker node update registry.docker-dca.example --availability drain

registry.docker-dca.example

# note that the nodes are now in Drain

vagrant@master:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

jdmwyhbti8s3fnmd17lw79rhw * master.docker-dca.example Ready Active Leader 20.10.17

qvp6um8mstrgrlhhfpjj6khdc registry.docker-dca.example Ready Drain 20.10.17

rxgmhpjtky4s6mktwis2jyr99 worker1.docker-dca.example Ready Active 20.10.17

7980uc978wk928ncb6esv3jy3 worker2.docker-dca.example Ready Drain 20.10.17

Did our image registry container, which was running on that node, go down?


And what happened to the ping containers? They are all running on worker1 now, as we can see.

vagrant@master:~$ docker service ps pinger --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1ppd0q97wdcx pinger.1 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 21 minutes ago

rwk8i4r1wsvb pinger.2 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 29 minutes ago

neu2uugr2cag pinger.3 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 23 minutes ago

92yxi5wjb3xn pinger.4 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 21 minutes ago

h5ogmu03mth5 pinger.5 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 23 minutes ago

Inspect the node that is in drain:

vagrant@master:~$ docker node inspect worker2.docker-dca.example --pretty | grep Availability

Availability: Drain

Let's reactivate the nodes.

vagrant@master:~$ docker node update worker2.docker-dca.example --availability active

worker2.docker-dca.example

vagrant@master:~$ docker node update registry.docker-dca.example --availability active

registry.docker-dca.example

vagrant@master:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

jdmwyhbti8s3fnmd17lw79rhw * master.docker-dca.example Ready Active Leader 20.10.17

qvp6um8mstrgrlhhfpjj6khdc registry.docker-dca.example Ready Active 20.10.17

rxgmhpjtky4s6mktwis2jyr99 worker1.docker-dca.example Ready Active 20.10.17

7980uc978wk928ncb6esv3jy3 worker2.docker-dca.example Ready Active 20.10.17
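The drain and reactivate steps above can be wrapped in a small helper for maintenance windows. This is only a sketch: drain_and_wait is a hypothetical function name, not a Docker command, and it assumes it is run on a manager node. It drains the node and then polls docker node ps until Swarm no longer wants any task running there.

```shell
# Hypothetical helper (not part of the Docker CLI): drain a node for
# maintenance and wait until Swarm no longer wants any task running on it.
drain_and_wait() {
  node="$1"
  docker node update --availability drain "$node" >/dev/null || return 1
  # "docker node ps" lists the tasks assigned to a node; -q prints only task IDs.
  while [ -n "$(docker node ps --filter desired-state=running -q "$node")" ]; do
    sleep 2
  done
  echo "$node is drained"
}

# Usage (on a manager):
#   drain_and_wait worker2.docker-dca.example
#   ... do the maintenance ...
#   docker node update --availability active worker2.docker-dca.example
```

The loop checks the desired state only, so containers may still be shutting down for a moment after the function returns.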

Does Swarm rebalance the service across the nodes?


Scale to 10 and see that the scheduler now takes the reactivated nodes into account.

vagrant@master:~$ docker service scale pinger=10

pinger scaled to 10

overall progress: 10 out of 10 tasks

1/10: running [==================================================>]

2/10: running [==================================================>]

3/10: running [==================================================>]

4/10: running [==================================================>]

5/10: running [==================================================>]

6/10: running [==================================================>]

7/10: running [==================================================>]

8/10: running [==================================================>]

9/10: running [==================================================>]

10/10: running [==================================================>]

verify: Service converged

vagrant@master:~$ docker service ps pinger --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1ppd0q97wdcx pinger.1 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 32 minutes ago

rwk8i4r1wsvb pinger.2 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 40 minutes ago

neu2uugr2cag pinger.3 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 34 minutes ago

92yxi5wjb3xn pinger.4 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 32 minutes ago

h5ogmu03mth5 pinger.5 registry.docker-dca.example:5000/alpine:latest worker1.docker-dca.example Running Running 34 minutes ago

y73mw5vgyg16 pinger.6 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 19 seconds ago

4jvjg82n23p7 pinger.7 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 9 seconds ago

y41hd554jn8d pinger.8 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Running Running 19 seconds ago

8juifuow9gdl pinger.9 registry.docker-dca.example:5000/alpine:latest worker2.docker-dca.example Running Running 14 seconds ago

v1671qo296zd pinger.10 registry.docker-dca.example:5000/alpine:latest registry.docker-dca.example Running Running 9 seconds ago

vagrant@master:~$

Notice also that Swarm placed none of the new tasks on worker1, because it tries to balance resources when scaling.
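To see the distribution at a glance instead of reading the table row by row, the running tasks can be counted per node. The function below is a sketch; tasks_per_node is a hypothetical name, and it relies on the .Node placeholder of docker service ps --format.

```shell
# Hypothetical helper: count the running tasks of a service on each node.
tasks_per_node() {
  docker service ps --filter desired-state=running --format '{{.Node}}' "$1" |
    awk '{ count[$1]++ } END { for (n in count) print n, count[n] }' |
    sort
}

# Usage (on a manager):
#   tasks_per_node pinger
```

Each output line is a node hostname followed by its number of running tasks for that service.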

Remove the service before the next labs.

vagrant@master:~$ docker service rm pinger

pinger

Warning: forcibly shutting down a machine without draining it first can cause data loss in the middle of a transaction.

Docker Swarm tends to balance resources, not the number of tasks per node.

Secrets

Secrets store sensitive (confidential) data such as passwords, private keys, SSL certificates, or any other data that must not be sent over the network unencrypted.

They are stored as blobs (Binary Large OBjects), collections of binary data stored as a single entity.

To manage secrets we use the docker secret command.

The docker secret create command does not take the secret value as a command-line argument; it reads it only from STDIN or from a file.

vagrant@master:~$ echo "senha123" | docker secret create senha_db -

asfnakvaj9uyfzj4dld3pob6g

# note that you cannot see the secret's value

vagrant@master:~$ docker secret inspect senha_db --pretty

ID: asfnakvaj9uyfzj4dld3pob6g

Name: senha_db

Driver:

Created at: 2022-07-05 16:18:21.940056803 +0000 utc

Updated at: 2022-07-05 16:18:21.940056803 +0000 utc

vagrant@master:~$
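The example above feeds the secret through STDIN. The file form could look like the sketch below; create_secret_from_file and the path ./senha_db.txt are hypothetical names chosen for illustration. Reading from a file keeps the value out of the shell history, which the echo pipeline does not.

```shell
# Hypothetical helper: create a secret from a file after checking it is readable.
create_secret_from_file() {
  name="$1"
  file="$2"
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi
  # docker secret create SECRET FILE reads the secret value from FILE.
  docker secret create "$name" "$file"
}

# Usage (on a manager; the file path is an assumption):
#   create_secret_from_file senha_db ./senha_db.txt
```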

The secret must be passed as a parameter to the service; inside the container it is stored in the file /run/secrets/<secret_name>.

Let's run a mysql container passing the password as a secret.

vagrant@master:~$ docker service create --name mysql_database \

> --publish 3306:3306/tcp \

> --secret senha_db \

> -e MYSQL_ROOT_PASSWORD_FILE=/run/secrets/senha_db \

> registry.docker-dca.example:5000/mysql:5.7

image registry.docker-dca.example:5000/mysql:5.7 could not be accessed on a registry to record

its digest. Each node will access registry.docker-dca.example:5000/mysql:5.7 independently,

possibly leading to different nodes running different

versions of the image.

4i4v1sqgy78w2ekzdscychojc

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

We can reach the database through a client. Let's install the MariaDB client and log in to the server with the password to check.

vagrant@master:~$ sudo apt-get install mariadb-client -y

# since we published the port, any of the node hostnames would answer the request, even if the container is running on another node

vagrant@master:~$ mysql -h master.docker-dca.example -u root -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 4

Server version: 5.7.38 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> CREATE DATABASE testedb

-> ;

Query OK, 1 row affected (0.002 sec)

MySQL [(none)]> SHOW DATABASES

-> ;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| testedb |

+--------------------+

5 rows in set (0.002 sec)

MySQL [(none)]> EXIT

Bye

Destroy the service.

vagrant@master:~$ docker service rm mysql_database

Network

As mentioned earlier, when Swarm is initialized it creates an overlay network called ingress by default, but we can also create other swarm-scoped networks.

vagrant@master:~$ docker network create -d overlay dca

vcql6ccw3n11fixhyh687ng6r

vagrant@master:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

7d44eda15cc1 bridge bridge local

# Note that a network created with the overlay driver gets the swarm scope

vcql6ccw3n11 dca overlay swarm

44b44c41564a docker_gwbridge bridge local

f7e2501a4afc host host local

z9uj2ahzsg28 ingress overlay swarm

c2447a55b6c2 none null local

Did this network come up on every node in the swarm?

Let's start a service on this network.

# --publish lets you set both the target (container) port and the published port. If the published port is omitted, Swarm assigns a random high-numbered port.

vagrant@master:~$ docker service create --name webserver --publish target=80,published=80 --network dca registry.docker-dca.example:5000/nginx

image registry.docker-dca.example:5000/nginx:latest could not be accessed on a registry to record

its digest. Each node will access registry.docker-dca.example:5000/nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

z071ovcmnnhwpja74insryi0c

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

# note that the task started on worker2, although we created the overlay network on the master

vagrant@master:~$ docker service ps webserver

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

azpyvzjvfb2r webserver.1 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running 30 seconds ago

vagrant@master:~$

Is the dca overlay network we created present on the other nodes? Yes, wherever it is needed: Swarm extends an overlay network to a worker node when a task attached to that network is scheduled there. Since webserver.1 is running on worker2, let's check on that node.

❯

[vagrant@worker2 ~]$ docker network ls

NETWORK ID NAME DRIVER SCOPE

9dc63665ea48 bridge bridge local

# here it is

vcql6ccw3n11 dca overlay swarm

258d442717bf docker_gwbridge bridge local

f988e2b4b3d5 host host local

z9uj2ahzsg28 ingress overlay swarm

8c368d1f1a41 none null local

# and is the container running?

[vagrant@worker2 ~]$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4cf386c682fc registry.docker-dca.example:5000/nginx:latest "/docker-entrypoint.…" 3 minutes ago Up 3 minutes 80/tcp webserver.1.azpyvzjvfb2r2tzexu24pjhn3

[vagrant@worker2 ~]$

If we scale the service, we can see that the new replicas come up on the other nodes too.

vagrant@master:~$ docker service ps webserver --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

azpyvzjvfb2r webserver.1 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running 10 minutes ago

n3f6egq78zeq webserver.2 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running 25 seconds ago

ijvv9lbzb6xg webserver.3 registry.docker-dca.example:5000/nginx:latest registry.docker-dca.example Running Running 45 seconds ago

vagrant@master:~$

Could we create an overlay network from worker2, which is not a manager of the cluster?


And how does communication between networks work? Even when more than one overlay network exists, names can still be resolved simply by pointing at the hostnames.

Volumes

If we create a volume, where will it live when a service is scaled across nodes? Going back to the volumes study, let's install the NFS plugin again, since we destroyed the machines earlier.

Is it necessary to install the plugin on every machine?

Stack

A stack is the Swarm counterpart of Compose. With it we can automate the creation of several services at once from a single manifest. One curiosity: a stack cannot build images the way Compose does, because the build parameter is not supported, only image. You must supply a prebuilt image.

In production, deploying services one by one is not ideal. The best practice is to keep a file that defines the whole environment.

When Docker runs in swarm mode, we can use the docker stack deploy command to deploy a complete application to the swarm. The command takes a Compose file; the file does not need a specific name, but it must be a YAML file.

To work with stacks, the Compose file must use version 3 or higher.

Let's deploy the webserver we used earlier, this time as a stack.

First create a folder for the stack files and the webserver.yaml file where we will define our Compose configuration.

vagrant@master:~$ mkdir -p stack

vagrant@master:~$ cd stack/

vagrant@master:~/stack$ cat << EOF > webserver.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    hostname: webserver
    ports:
      - 80:80
EOF

# Note that, just like Compose, it created a network

# --compose-file can be shortened to -c

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

Creating network myproject_default

Creating service myproject_webserver

# Note also that the service name is the project name followed by the service name

vagrant@master:~/stack$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

7c47dz1amos0 myproject_webserver replicated 1/1 registry.docker-dca.example:5000/nginx:latest *:80->80/tcp

vagrant@master:~/stack$

Let's look at the stack subcommands.

# List the deployments

vagrant@master:~/stack$ docker stack ls

NAME SERVICES ORCHESTRATOR

myproject 1 Swarm

vagrant@master:~/stack$

# Check the services of a deployment

vagrant@master:~/stack$ docker stack services myproject

ID NAME MODE REPLICAS IMAGE PORTS

7c47dz1amos0 myproject_webserver replicated 1/1 registry.docker-dca.example:5000/nginx:latest *:80->80/tcp

vagrant@master:~/stack$

# Or use ps to show the tasks that are running

vagrant@master:~/stack$ docker stack ps myproject

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

zmroxabl08o0 myproject_webserver.1 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running 4 minutes ago

p17l8q2q1kiu \_ myproject_webserver.1 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Shutdown Rejected 4 minutes ago "No such image: registry.docke…"

vagrant@master:~/stack$

Now let's change some parameters in the stack file.

There is no need to remove a deployment before deploying again; it simply updates the existing one.

The deploy field defines the strategy for our deployment, such as replicas, policies, and so on.

vagrant@master:~/stack$ cat << EOF > webserver.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    hostname: webserver
    ports:
      - 80:80
    deploy:
      replicas: 5
      restart_policy:
        condition: on-failure
EOF

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

# checking that replicas worked

vagrant@master:~/stack$ docker stack services myproject

ID NAME MODE REPLICAS IMAGE PORTS

7c47dz1amos0 myproject_webserver replicated 5/5 registry.docker-dca.example:5000/nginx:latest *:80->80/tcp

vagrant@master:~/stack$

# Note that no task landed on the master in this run.

vagrant@master:~/stack$ docker stack ps myproject --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

v2934l9iqick myproject_webserver.1 registry.docker-dca.example:5000/nginx:latest registry.docker-dca.example Running Running 2 minutes ago

ycj0gyhmder6 myproject_webserver.2 registry.docker-dca.example:5000/nginx:latest registry.docker-dca.example Running Running 2 minutes ago

md31kkccla2d myproject_webserver.3 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running 2 minutes ago

22wwst0ohbv5 myproject_webserver.4 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running 2 minutes ago

vzdo661usdf6 myproject_webserver.5 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running 2 minutes ago

vagrant@master:~/stack$
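Besides replicas and restart_policy, the deploy block accepts other policies. The sketch below writes a separate, hypothetical file (webserver-rolling.yaml) so the lab file stays untouched; the update_config, max_attempts and resources values are illustrative assumptions, not settings taken from this lab.

```shell
# Write a hypothetical variant of the stack file; the file name and all
# values below are illustrative.
cat << 'EOF' > webserver-rolling.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    ports:
      - 80:80
    deploy:
      replicas: 5
      update_config:
        parallelism: 1     # update one task at a time
        delay: 10s         # wait between batches
      restart_policy:
        condition: on-failure
        max_attempts: 3    # stop retrying after three failed restarts
      resources:
        limits:
          cpus: '0.50'
          memory: 128M
EOF

# Then, on a manager:
#   docker stack deploy --compose-file webserver-rolling.yaml myproject
```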

Run the test again, replacing replicas with global mode, to see how that deployment mode works.

vagrant@master:~/stack$ cat << EOF > webserver.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    hostname: webserver
    ports:
      - 80:80
    deploy:
      mode: global
      restart_policy:
        condition: on-failure
EOF

# I left this in to show that the service mode cannot be changed on a live service. You have to remove the service and deploy again.

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

Updating service myproject_webserver (id: 7c47dz1amos0u01bxik141yrx)

failed to update service myproject_webserver: Error response from daemon: rpc error: code = Unimplemented desc = service mode change is not allowed

vagrant@master:~/stack$

vagrant@master:~/stack$ docker stack rm myproject

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

vagrant@master:~/stack$ docker stack services myproject

ID NAME MODE REPLICAS IMAGE PORTS

286ebe59na9g myproject_webserver global 4/4 registry.docker-dca.example:5000/nginx:latest *:80->80/tcp

# Note that it created one task on every node, including the master

vagrant@master:~/stack$ docker service ps myproject_webserver

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

mby8i5ycafmo myproject_webserver.7980uc978wk928ncb6esv3jy3 registry.docker-dca.example:5000/nginx:latest worker2.docker-dca.example Running Running about a minute ago

qgz3s92kyd46 myproject_webserver.jdmwyhbti8s3fnmd17lw79rhw registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running about a minute ago

zay6j3qxum2y myproject_webserver.qvp6um8mstrgrlhhfpjj6khdc registry.docker-dca.example:5000/nginx:latest registry.docker-dca.example Running Running about a minute ago

q04akpyw6jzh myproject_webserver.rxgmhpjtky4s6mktwis2jyr99 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running about a minute ago

Removing...

vagrant@master:~/stack$ docker stack rm myproject

Removing service myproject_webserver

Removing network myproject_default

Constraints and Labels

https://docs.docker.com/engine/swarm/services/#placement-constraints

Constraints restrict which nodes our tasks may be deployed on. They usually go inside the placement block.

https://docs.docker.com/config/labels-custom-metadata/

A label is a piece of metadata that we can match on to group objects when filtering; labels are also used by constraints.

As an aside, labels can be added to images, containers, volumes, networks, nodes, and services. In a constraint, only == or != can be used for matching: either it is or it is not.

In this example we will ask for manager nodes only.

vagrant@master:~/stack$ cat << EOF > webserver.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    hostname: webserver
    ports:
      - 80:80
    deploy:
      mode: replicated
      replicas: 5
      placement:
        constraints:
          - node.role==manager # either manager or worker
          ## other examples: node.id node.hostname
      restart_policy:
        condition: on-failure
EOF

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

Updating service myproject_webserver (id: acjc23mh4gsz8r54gn3a7bvzg)

vagrant@master:~/stack$

# note that everything landed on the master

vagrant@master:~/stack$ docker stack ps myproject --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

6gm3u7nwm24m myproject_webserver.1 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running 46 seconds ago

u45vc7u41dxn myproject_webserver.2 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running 45 seconds ago

0qywj4djqfxs myproject_webserver.3 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running 44 seconds ago

p9848shciu3m myproject_webserver.4 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running 43 seconds ago

i1s5wkozo2uy myproject_webserver.5 registry.docker-dca.example:5000/nginx:latest master.docker-dca.example Running Running 42 seconds ago

vagrant@master:~/stack$

Let's add some labels for disk type, region, and operating system to the nodes.

vagrant@master:~/stack$ docker node update --label-add disk=ssd worker1.docker-dca.example

worker1.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add region=us-east-1 worker1.docker-dca.example

worker1.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add disk=hdd worker2.docker-dca.example

worker2.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add region=us-east-1 worker2.docker-dca.example

worker2.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add region=us-east-2 registry.docker-dca.example

registry.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add os=ubuntu worker1.docker-dca.example

worker1.docker-dca.example

vagrant@master:~/stack$ docker node update --label-add os=centos worker2.docker-dca.example

worker2.docker-dca.example

# or save time by doing it all at once

vagrant@master:~/stack$ docker node update --label-add os=ubuntu --label-add disk=ssd --label-add region=us-east-1 master.docker-dca.example

master.docker-dca.example

Inspecting one of these nodes, we can see its labels.

vagrant@master:~/stack$ docker node inspect master.docker-dca.example --pretty

ID: jdmwyhbti8s3fnmd17lw79rhw

Labels:

- disk=ssd

- os=ubuntu

- region=us-east-1

Hostname: master.docker-dca.example

Joined at: 2022-07-01 01:56:16.645464567 +0000 utc

Status:

State: Ready

Availability: Active

Address: 10.10.10.100

Manager Status:

Address: 10.10.10.100:2377

Raft Status: Reachable

Leader: Yes

Platform:

Operating System: linux

Architecture: x86_64

Resources:

CPUs: 2

Memory: 1.937GiB

Plugins:

Log: awslogs, fluentd, gcplogs, gelf, journald, json-file, local, logentries, splunk, syslog

Network: bridge, host, ipvlan, macvlan, null, overlay

Volume: local, trajano/nfs-volume-plugin:latest

Engine Version: 20.10.17

TLS Info:

TrustRoot:

-----BEGIN CERTIFICATE-----

MIIBaTCCARCgAwIBAgIUG+odVPoSYT2+BmLkq3wInnasx9owCgYIKoZIzj0EAwIw

EzERMA8GA1UEAxMIc3dhcm0tY2EwHhcNMjIwNzAxMDE1MTAwWhcNNDIwNjI2MDE1

MTAwWjATMREwDwYDVQQDEwhzd2FybS1jYTBZMBMGByqGSM49AgEGCCqGSM49AwEH

A0IABMYd+K9Z9i7NLOBRzUOYL4vKJ/jaJascVXJYKSafMbBwhr/WOgcZ6NlPBIMG

zsdcxTP9zIYggeiSGYmA7WIMZ3ujQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNVHRMB

Af8EBTADAQH/MB0GA1UdDgQWBBQbh3AGVtxzbeT6zxp0QghuuotPcjAKBggqhkjO

PQQDAgNHADBEAiAVHH83vBU5qb/sFbF8DBvFyWDHjFsV649/BAVWcAyncQIgFKcU

/M/pAK7YI5bdgKz1RA57XzUdVMVvD+ErJGSgnT0=

-----END CERTIFICATE-----

Issuer Subject: MBMxETAPBgNVBAMTCHN3YXJtLWNh

Issuer Public Key: MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAExh34r1n2Ls0s4FHNQ5gvi8on+NolqxxVclgpJp8xsHCGv9Y6Bxno2U8EgwbOx1zFM/3MhiCB6JIZiYDtYgxnew==
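Inspecting nodes one by one gets tedious. As a sketch, the node.label filter of docker node ls can list every node that carries a given label; nodes_with_label is a hypothetical helper name, and it is meant to be run on a manager.

```shell
# Hypothetical helper: list the hostnames of nodes carrying the label KEY=VALUE.
nodes_with_label() {
  docker node ls --filter "node.label=$1=$2" --format '{{.Hostname}}'
}

# Usage (on a manager):
#   nodes_with_label disk ssd
#   nodes_with_label region us-east-1
```

Running it before a deploy is a quick way to check that at least one node can satisfy a label constraint.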

Now, using these labels, let's redo our stack.

vagrant@master:~/stack$ cat << EOF > webserver.yaml
version: '3.9'
services:
  webserver:
    image: registry.docker-dca.example:5000/nginx
    hostname: webserver
    ports:
      - 80:80
    deploy:
      mode: replicated
      replicas: 5
      placement:
        constraints:
          - node.labels.disk==ssd
          - node.labels.os==ubuntu
          - node.labels.region==us-east-1
          - node.role==worker
      restart_policy:
        condition: on-failure
EOF

If we apply this stack again, will it replace what is already there? No. Remember that the service has already reached its desired state, so if it has 5 containers running, the constraints will only apply to new tasks. To make it work, let's remove what is there first.

Once the stack is redeployed (output below), notice that every task was created on worker1, since it is the only node that satisfies all the constraints.

vagrant@master:~/stack$ docker stack rm myproject

Removing service myproject_webserver

Removing network myproject_default

vagrant@master:~/stack$ docker stack deploy --compose-file webserver.yaml myproject

Creating network myproject_default

Creating service myproject_webserver

vagrant@master:~/stack$ docker stack ps myproject --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

usyfq0koorpe myproject_webserver.1 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running less than a second ago

s5myway7hhwl myproject_webserver.2 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running less than a second ago

ivabd1hijfxs myproject_webserver.3 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running less than a second ago

ifn1imn3d3ip myproject_webserver.4 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running less than a second ago

knyi5l2xmery myproject_webserver.5 registry.docker-dca.example:5000/nginx:latest worker1.docker-dca.example Running Running less than a second ago

vagrant@master:~/stack$
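
To see why every task landed on worker1, one option is to ask Swarm which nodes carry each label. The sketch below is a minimal helper, assuming it runs on a manager node and that the labels were added with docker node update --label-add; the function names nodes_with_label and report_label_matches are hypothetical.

```shell
# List the hostnames of nodes carrying a given node label (key=value).
# Assumption: run on a manager node of the swarm.
nodes_with_label() {
  docker node ls --filter "node.label=$1" --format '{{.Hostname}}'
}

# Print the matching nodes for each label used in the constraints above.
report_label_matches() {
  for label in disk=ssd os=ubuntu region=us-east-1; do
    echo "== ${label}"
    nodes_with_label "${label}"
  done
}
```

Calling report_label_matches prints one section per label. A node has to appear in all three sections, and also have the worker role, to be eligible for the webserver tasks.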

Remove the stack.

vagrant@master:~/stack$ docker stack rm myproject

Removing service myproject_webserver

Removing network myproject_default

Now let's go deeper by creating a larger stack.

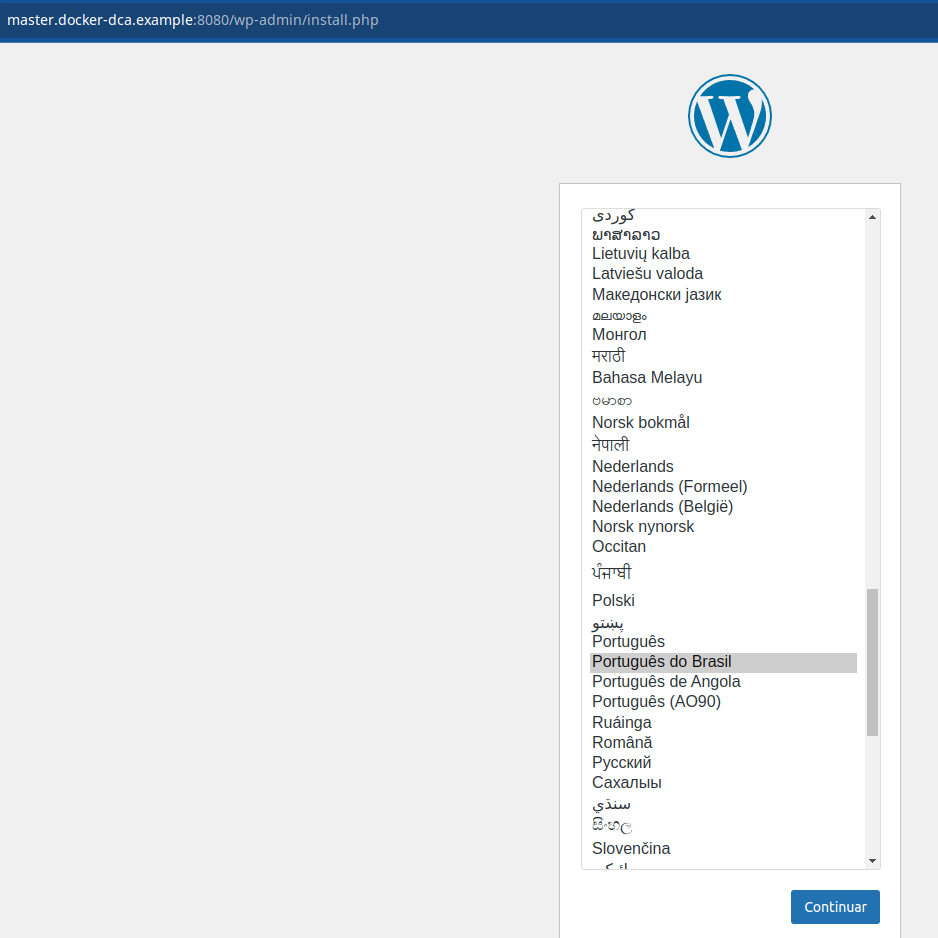

vagrant@master:~/stack$ cat << EOF > webserver.yaml

version: '3.9'

volumes:

  mysql_db:

networks:

  wordpress_net:

# This secret was declared earlier and is reused here to show the feature

secrets:

  senha_db:

    external: true # external means it was not created here

services:

  wordpress:

    image: registry.docker-dca.example:5000/wordpress

    ports:

      - 8080:80

    environment:

      WORDPRESS_DB_HOST: db

      WORDPRESS_DB_USER: wpuser

      WORDPRESS_DB_PASSWORD_FILE: /run/secrets/senha_db

      WORDPRESS_DB_NAME: wordpress

    networks:

      - wordpress_net

    secrets:

      - senha_db

    deploy:

      mode: replicated

      replicas: 5

      placement:

        constraints:

          - node.role==worker

      restart_policy:

        condition: on-failure

    depends_on:

      - db

  db:

    image: registry.docker-dca.example:5000/mysql:5.7

    volumes:

      - mysql_db:/var/lib/mysql

    secrets:

      - senha_db

    networks:

      - wordpress_net

    environment:

      MYSQL_DATABASE: wordpress

      MYSQL_USER: wpuser

      MYSQL_PASSWORD_FILE: /run/secrets/senha_db

      MYSQL_RANDOM_ROOT_PASSWORD: '1'

    deploy:

      replicas: 1

      placement:

        constraints:

          - node.role==manager

      restart_policy:

        condition: on-failure

EOF

docker stack deploy wordpress --compose-file webserver.yaml

Let's deploy the stack. Notice that it performed an update.

vagrant@master:~/stack$ docker stack deploy wordpress --compose-file webserver.yaml

Updating service wordpress_db (id: z91i4piqiua4mufapobni2x3d)

Updating service wordpress_wordpress (id: lsp4152rvzpwxbvzyoq7k3g2g)

docker stack services wordpress

docker stack ps wordpress

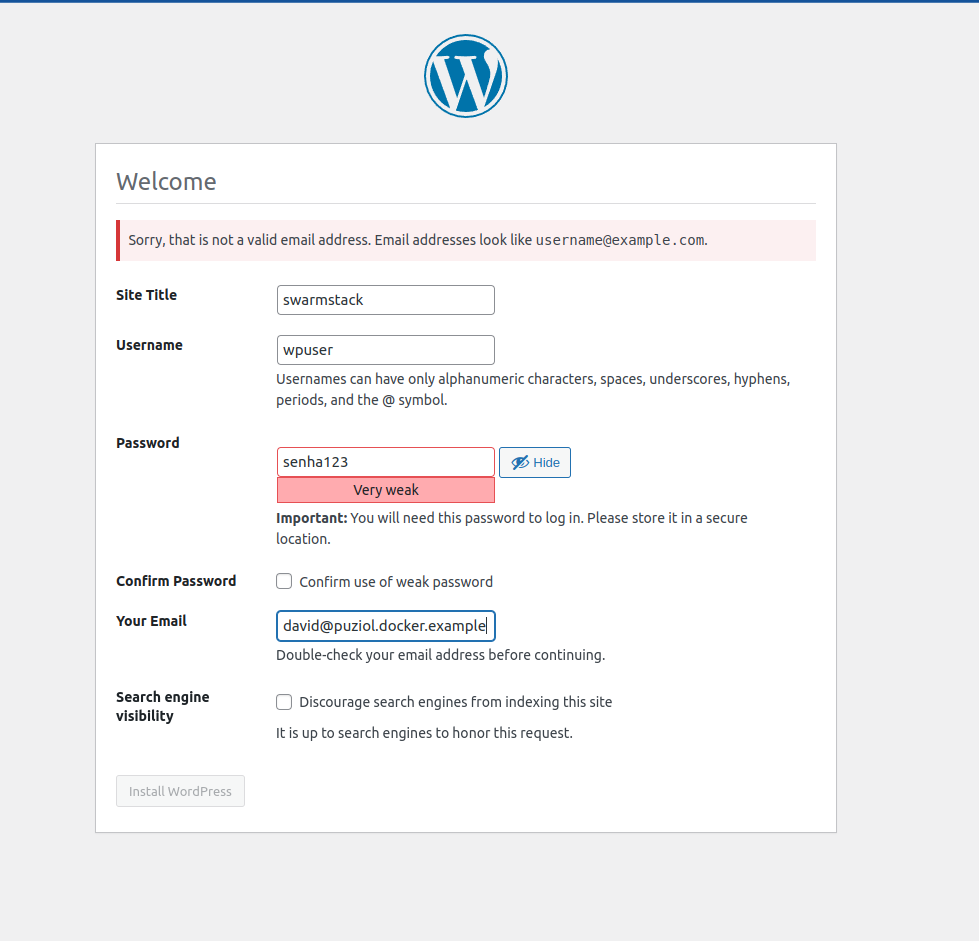

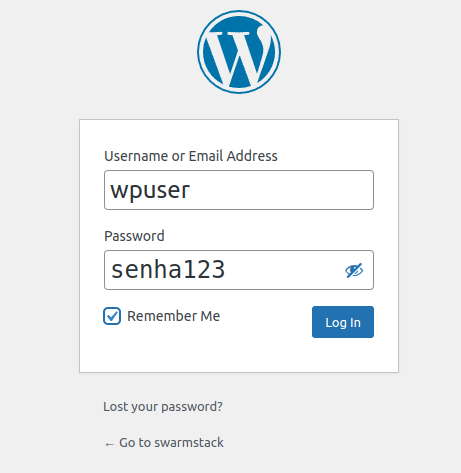

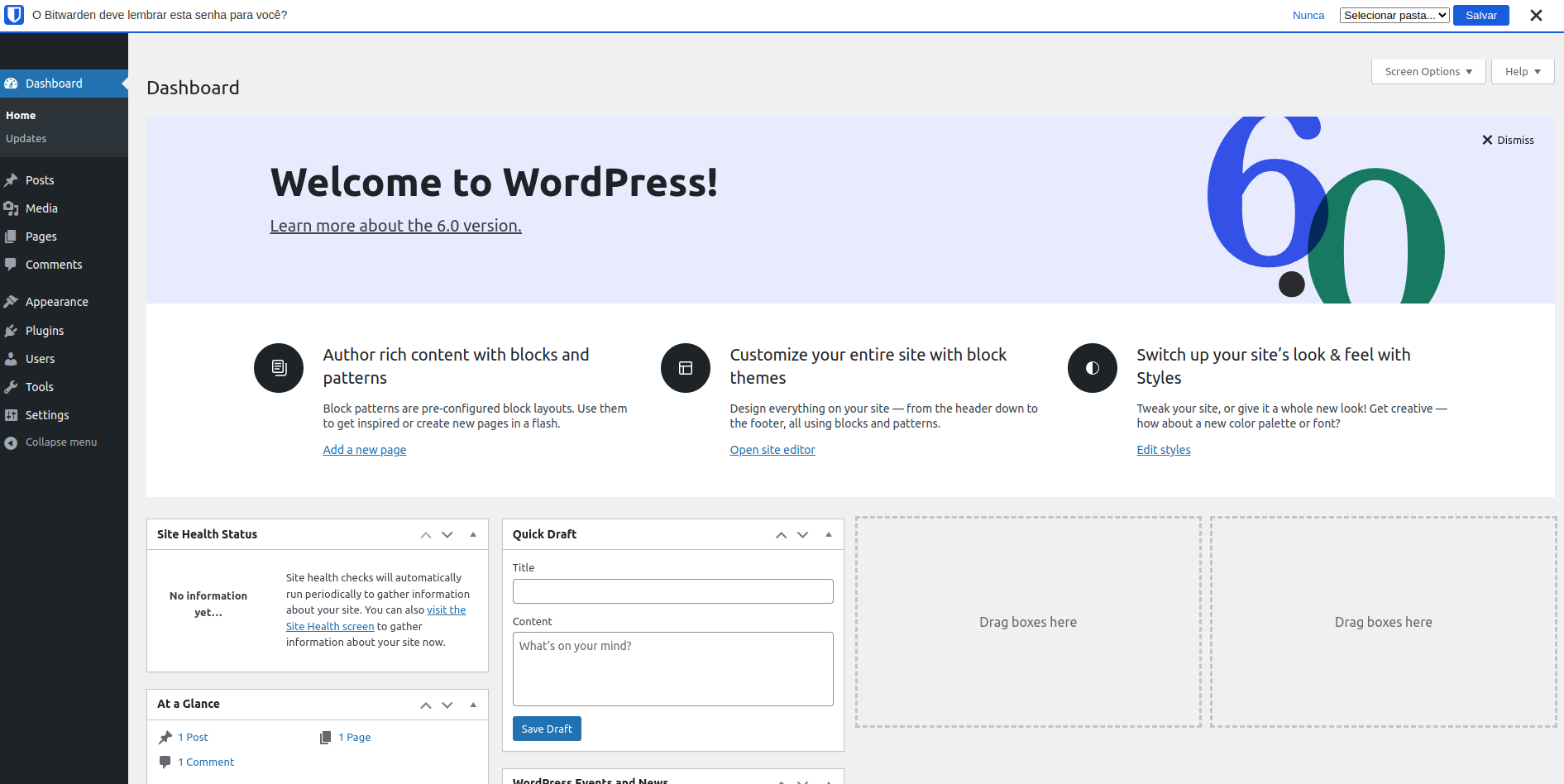

Open the browser and configure the site.

Note that we can reach the site through the address of any node in the cluster, because the port is published in ingress mode and the service has a VIP configured.

http://master.docker-dca.example:8080/
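
A quick way to confirm that every node answers on the published port is to request the page from each of them. This is only a sketch: it assumes curl is installed, that the four hostnames below resolve from where it runs, and the function names check_node and check_all_nodes are hypothetical.

```shell
# Print the HTTP status code returned on port 8080 by the given host.
check_node() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$1:8080/"
}

# Query every node of the lab cluster (hostnames assumed from the listings above).
check_all_nodes() {
  for node in master worker1 worker2 registry; do
    echo "${node}.docker-dca.example -> $(check_node "${node}.docker-dca.example")"
  done
}
```

Running check_all_nodes should show a response even for the master, which runs no WordPress container, since the request is forwarded to a replica on another node.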

Managing Resources

We can also manage resource limits and reservations for a container through the resources parameter in the compose file. In the deploy block of the wordpress service we can include a few extra parameters, marked as new in the file below.

vagrant@master:~/stack$ cat << EOF > webserver.yaml

version: '3.9'

volumes:

  mysql_db:

networks:

  wordpress_net:

# This secret was declared earlier and is reused here to show the feature

secrets:

  senha_db:

    external: true # external means it was not created here

services:

  wordpress:

    image: registry.docker-dca.example:5000/wordpress

    ports:

      - 8080:80

    environment:

      WORDPRESS_DB_HOST: db

      WORDPRESS_DB_USER: wpuser

      WORDPRESS_DB_PASSWORD_FILE: /run/secrets/senha_db

      WORDPRESS_DB_NAME: wordpress

    networks:

      - wordpress_net

    secrets:

      - senha_db

    deploy:

      mode: replicated

      replicas: 5

      placement:

        constraints:

          - node.role==worker

      restart_policy:

        condition: on-failure

      ########## New parameters ##########

      resources:

        limits: # (maximum values)

          cpus: "1"

          memory: 60M # in megabytes

        reservations: # (guaranteed values)

          cpus: "0.5" # 50% of one CPU

          memory: 30M

      ######################################

    depends_on:

      - db

  db:

    image: registry.docker-dca.example:5000/mysql:5.7

    volumes:

      - mysql_db:/var/lib/mysql

    secrets:

      - senha_db

    networks:

      - wordpress_net

    environment:

      MYSQL_DATABASE: wordpress

      MYSQL_USER: wpuser

      MYSQL_PASSWORD_FILE: /run/secrets/senha_db

      MYSQL_RANDOM_ROOT_PASSWORD: '1'

    deploy:

      replicas: 1

      placement:

        constraints:

          - node.role==manager

      restart_policy:

        condition: on-failure

EOF

This means the scheduler sets aside a guaranteed 0.5 CPU and 30M of RAM, so the container always has that much available. If it needs more, it can grow up to a maximum of 1 CPU and 60M of RAM.

What happens if every container reaches its maximum when only the minimum is guaranteed?
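
One way to reason about that question is to compare what the scheduler counts (reservations) with what the containers may actually use (limits). The arithmetic below is a rough sketch, assuming each worker has 2 CPUs like the master shown earlier by docker node inspect (the worker sizes are not shown here) and ignoring any other service on the node.

```shell
# All values in millicores (1000 = one CPU) to stay within integer arithmetic.
node_cpu=2000     # assumption: 2 CPUs per node
reserve_cpu=500   # reservations.cpus: "0.5"
limit_cpu=1000    # limits.cpus: "1"

# The scheduler places tasks by reservation, so this many tasks fit on one node.
max_tasks=$(( node_cpu / reserve_cpu ))

# If all of those tasks ran at their limit at the same time, they would ask for this much.
peak_demand=$(( max_tasks * limit_cpu ))

echo "tasks that fit by reservation: ${max_tasks}"
echo "CPU demand if all hit their limit: ${peak_demand}m of ${node_cpu}m"
```

Under these assumptions the node can be asked for more CPU than it has, so the containers end up competing for CPU time. Memory behaves differently: a container that goes past its memory limit is killed rather than slowed down.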

Let's deploy again.

vagrant@master:~/stack$ docker stack services wordpress

ID NAME MODE REPLICAS IMAGE PORTS

z91i4piqiua4 wordpress_db replicated 1/1 registry.docker-dca.example:5000/mysql:5.7

lsp4152rvzpw wordpress_wordpress replicated 5/5 registry.docker-dca.example:5000/wordpress:latest *:8080->80/tcp

vagrant@master:~/stack$ docker stack ps wordpress --filter desired-state=running

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

kk1j0x76zo8d wordpress_db.1 registry.docker-dca.example:5000/mysql:5.7 master.docker-dca.example Running Running 31 hours ago

odd02cbzelvj wordpress_wordpress.1 registry.docker-dca.example:5000/wordpress:latest worker2.docker-dca.example Running Running about a minute ago

wzaop3wrnrk6 wordpress_wordpress.2 registry.docker-dca.example:5000/wordpress:latest worker1.docker-dca.example Running Running about a minute ago

k6mjvcote05s wordpress_wordpress.3 registry.docker-dca.example:5000/wordpress:latest worker2.docker-dca.example Running Running about a minute ago

muvxhf3dzyxl wordpress_wordpress.4 registry.docker-dca.example:5000/wordpress:latest worker1.docker-dca.example Running Running about a minute ago

u2je2a8euo7s wordpress_wordpress.5 registry.docker-dca.example:5000/wordpress:latest registry.docker-dca.example Running Running about a minute ago

vagrant@master:~/stack$

# Checking the service...

vagrant@master:~/stack$ docker service inspect wordpress_wordpress --pretty

ID: lsp4152rvzpwxbvzyoq7k3g2g

Name: wordpress_wordpress

Labels:

com.docker.stack.image=registry.docker-dca.example:5000/wordpress

com.docker.stack.namespace=wordpress

Service Mode: Replicated

Replicas: 5

UpdateStatus:

State: completed

Started: 3 minutes ago

Completed: 3 minutes ago

Message: update completed

Placement:

Constraints: [node.role==worker]

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: registry.docker-dca.example:5000/wordpress:latest@sha256:b57bf41505b6eb494a59034820f5bd3517bbedcddb35c3ad1be950bfc96c2164

Env: WORDPRESS_DB_HOST=db WORDPRESS_DB_NAME=wordpress WORDPRESS_DB_PASSWORD_FILE=/run/secrets/senha_db WORDPRESS_DB_USER=wpuser

Secrets:

Target: senha_db

Source: senha_db

Resources:

## here are the resources we defined

Reservations:

CPU: 0.5

Memory: 30MiB

Limits:

CPU: 1

Memory: 60MiB

Networks: wordpress_wordpress_net

Endpoint Mode: vip

Ports:

PublishedPort = 8080

Protocol = tcp

TargetPort = 80

PublishMode = ingress

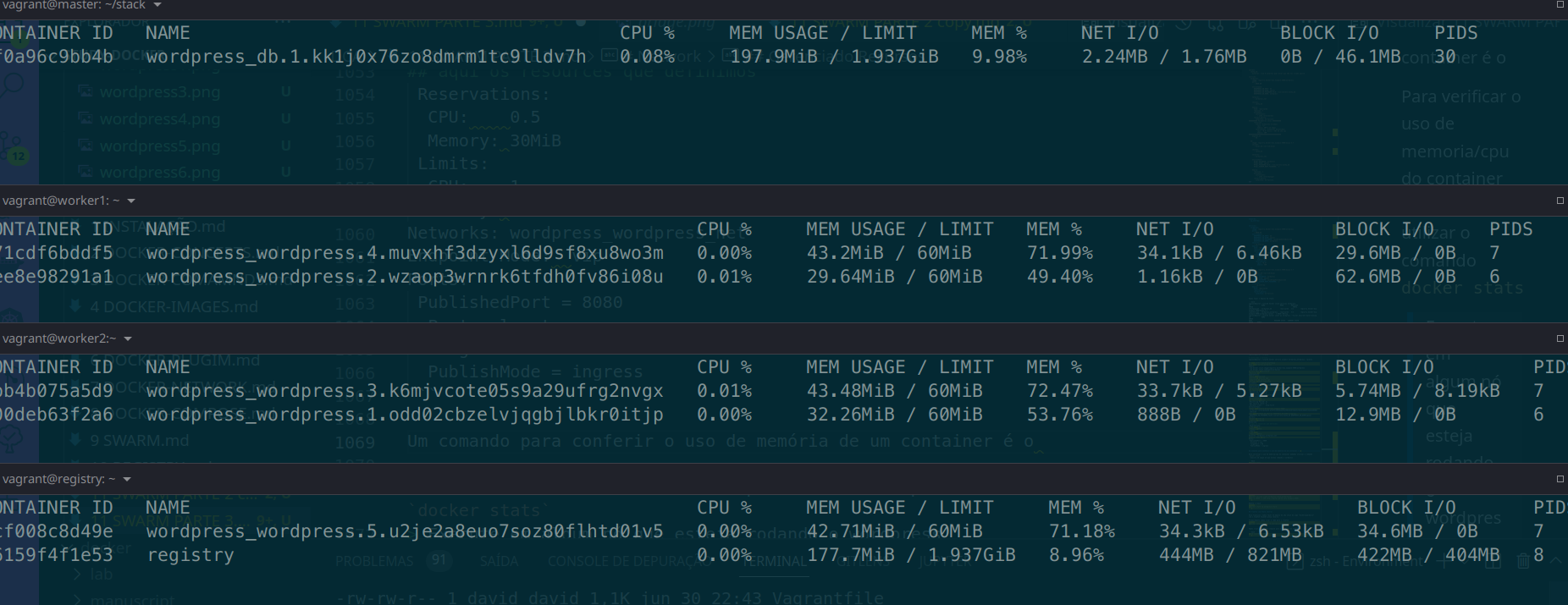

One command for checking the memory usage of a container is docker stats.

docker stats has to be run on each node to check that node's own containers. With this command we cannot get that information for the whole cluster directly from the master.

Run docker stats on each of the nodes and compare.

Is it possible to see, from a single node, the memory usage of containers running on other nodes? Not directly through Swarm. Other tools are used for that, as we will see later. Remember the global mode?
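
Since docker stats only reports the local containers, a simple workaround in this lab is to run it on every VM in turn from the host machine. This is a sketch under assumptions: it relies on vagrant ssh with the -c option, the VM names master, worker1, worker2 and registry are assumed from the hostnames in the listings, and the function names are hypothetical.

```shell
# Take a single docker stats snapshot on one Vagrant VM.
stats_on() {
  vagrant ssh "$1" -c "docker stats --no-stream"
}

# Repeat the snapshot on every VM of the lab (names are assumptions).
stats_everywhere() {
  for vm in master worker1 worker2 registry; do
    echo "== ${vm}"
    stats_on "${vm}"
  done
}
```

Run stats_everywhere from the directory that holds the Vagrantfile. The --no-stream flag makes docker stats print one sample and exit instead of refreshing continuously.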

Let's install a load generator on the master machine, which runs no WordPress container, and use it to stress the other nodes.

We will install Apache Benchmark on the master to run a stress test against the containers.

sudo apt-get install apache2-utils -y

Run Apache Benchmark and watch the CPU and memory usage of the containers on the nodes running WordPress. Because we target port 8080, the requests are routed to the nodes running it, such as worker1 and worker2.

ab -n 10000 -c 100 http://master.docker-dca.example:8080/

Now let's scale to 10 replicas and raise the concurrency to 1000 to watch the containers at work.
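
The two steps can be wrapped in a small helper. This is a sketch, assuming the stack was deployed under the name wordpress (so the service is wordpress_wordpress) and that it runs on the master, where ab was installed; the function name run_stress is hypothetical.

```shell
# Scale the WordPress service, then fire 10000 requests at the given concurrency.
# $1 = number of replicas, $2 = concurrent requests for ab
run_stress() {
  replicas=$1
  concurrency=$2
  docker service scale "wordpress_wordpress=${replicas}"
  ab -n 10000 -c "${concurrency}" http://master.docker-dca.example:8080/
}
```

For the scenario described here the call would be run_stress 10 1000. Keep docker stats open on the worker nodes while it runs.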

Notice that the containers went down: they did not have enough memory to handle the incoming requests and crashed. However, the scheduler started them again to reach the desired state of the service.
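
The tasks that were replaced stay in the task history of the service, so they can be listed after the test. A minimal sketch, assuming the service name wordpress_wordpress; the function name stopped_tasks is hypothetical.

```shell
# List the tasks of a service that are no longer meant to run,
# with the node they ran on, their last state and any error message.
stopped_tasks() {
  docker service ps "$1" --filter desired-state=shutdown \
    --format '{{.Name}} | {{.Node}} | {{.CurrentState}} | {{.Error}}'
}
```

Calling stopped_tasks wordpress_wordpress on the master shows one line per replaced task, which helps to see how often and on which node the containers were restarted.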

Setting limits is not mandatory, but you should always configure them for your containers. A container running without limits can take over all of the machine's CPU and memory and bring down every other container. See the GIF below.

Remove the stack.

docker stack rm wordpress

Q1 = Option 3 Q2 = Option 1 Q3 = Option 1 Q4 = A Q5 = B Q6 = A Q7 = Option 3 Q8 = C Q9 = C