Static Analysis

Static analysis checks source code without loading or running any binaries. We should do it manually before the pipeline and again at several pipeline stages as a verification step.

- Check the code against a set of rules.

- Some rules already exist and are provided by the community.

- We can create our own rules to stop pipelines when necessary, knowing that other rules will be checked elsewhere, for example by OPA.

For example, we can check whether:

- Resource limits are defined for the pods. Setting them is always a good idea, to keep a pod from growing without bound.

- The service account in use is not the default one. Using the default is not recommended, since a dedicated account allows more granular permissions.

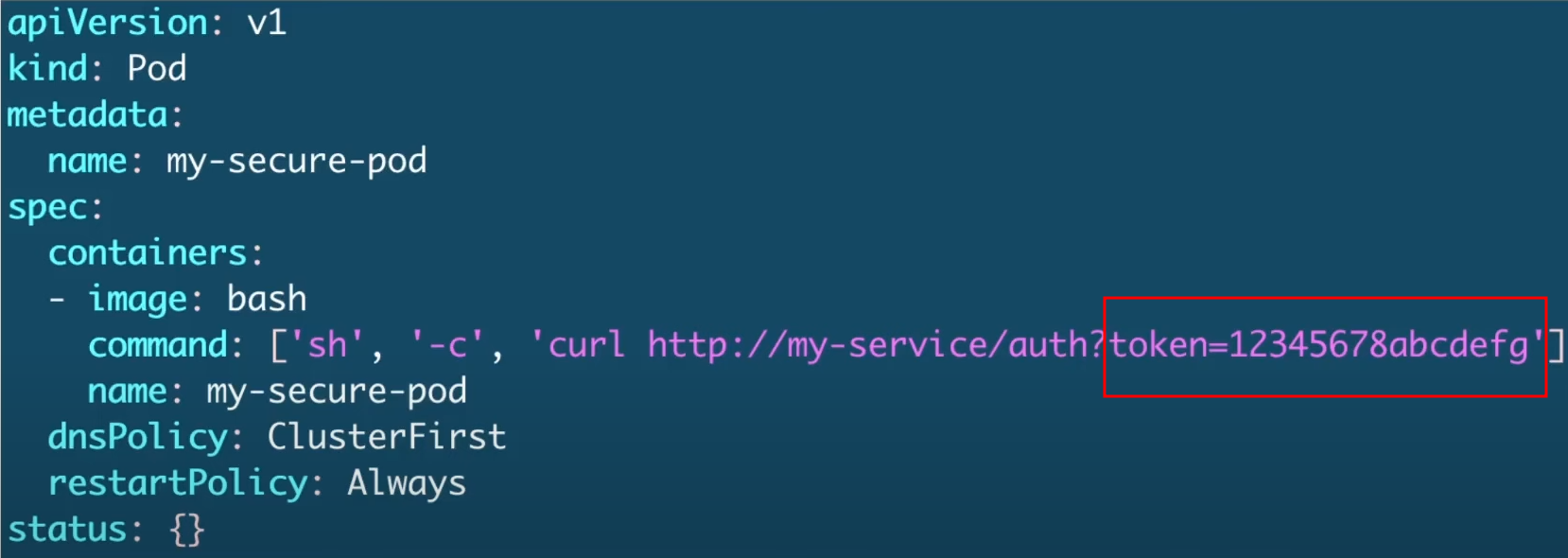

- Sensitive data is referenced through Secrets. Sensitive values should not be hard-coded in manifests as environment variables or ConfigMaps.

- Dockerfiles are also free of sensitive data.

- And so on.

Of course, everything depends on the use case of the project and the company.
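As a rough illustration of rule-based checks like the ones listed above, here is a minimal Python sketch. It assumes the manifest has already been parsed into a dictionary; the rule functions, the `RULES` list, and the sample pod are hypothetical and are not part of any existing tool.

```python
def rule_service_account(pod):
    """Flag pods that fall back to the default service account."""
    name = pod.get("spec", {}).get("serviceAccountName", "default")
    return ["pod uses the default service account"] if name == "default" else []


def rule_resource_limits(pod):
    """Flag containers that do not set CPU or memory limits."""
    findings = []
    for container in pod.get("spec", {}).get("containers", []):
        limits = (container.get("resources") or {}).get("limits") or {}
        for resource in ("cpu", "memory"):
            if resource not in limits:
                findings.append(f"container {container.get('name')}: no {resource} limit")
    return findings


# Hypothetical rule set; a team would add or remove rules to match its own use case.
RULES = [rule_service_account, rule_resource_limits]


def analyze(pod, rules=RULES):
    """Run every rule against the manifest and collect the findings."""
    findings = []
    for rule in rules:
        findings.extend(rule(pod))
    return findings


# Sample manifest, already parsed into a dict (illustrative values only).
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "nginx"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx", "resources": {}}]},
}

findings = analyze(pod)
for finding in findings:
    print(finding)
print("pipeline should stop" if findings else "manifest passed")
```

Keeping each rule as a small function makes it easy to add project-specific rules without touching the rest of the check.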

If we add static analysis to a CI/CD pipeline, where do these checks belong? Note that static analysis happens before the Deploy stage. From that point on, a different kind of analysis takes over: policies.

Let's manually analyze the manifest below, which would be the first stage of a static analysis.

We can already see that sensitive values are hard-coded directly in the manifest, which should never be done.

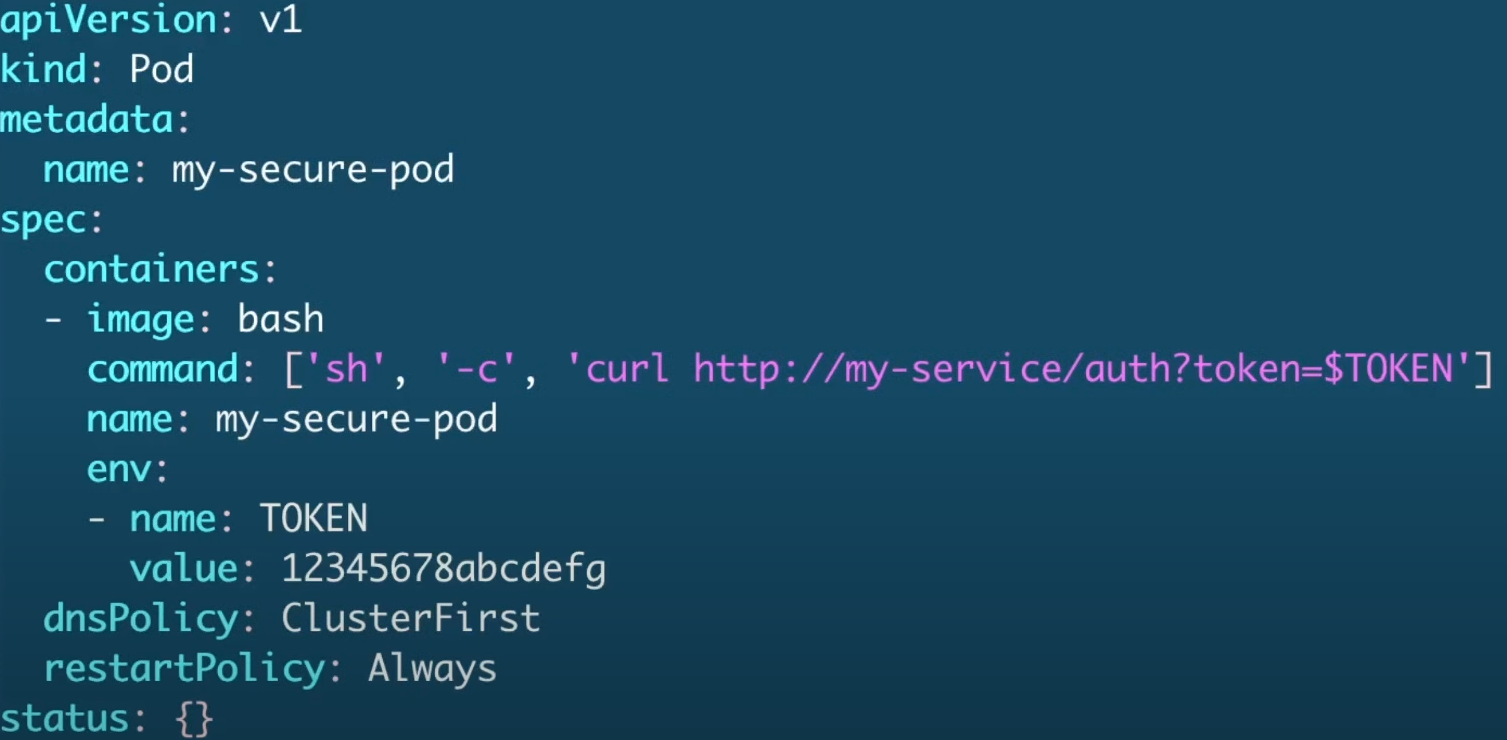

This only changes the reference; the value is still clearly exposed.

This already looks better, but it is still not the best scenario, because we could use Secrets. ConfigMaps may look like Secrets, but they are not!

And this would be the best scenario. Secrets are stored with better security than ConfigMaps and can be encrypted. There are also options for managing secrets externally, but within the scope of the CKS this is enough.
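The three scenarios discussed above (hard-coded value, ConfigMap reference, Secret reference) can be told apart mechanically. The sketch below is a hypothetical helper, not an existing tool; it assumes each `env` entry of a container has been parsed into a dictionary, and the variable, ConfigMap, and Secret names are made up for illustration.

```python
def classify_env(env):
    """Classify where one container env entry gets its value from."""
    if "value" in env:
        return "literal"      # value written directly in the manifest
    source = env.get("valueFrom") or {}
    if "secretKeyRef" in source:
        return "secret"       # preferred for sensitive data
    if "configMapKeyRef" in source:
        return "configmap"    # fine for plain configuration, not for secrets
    return "other"            # e.g. fieldRef or resourceFieldRef


# Illustrative env entries; all names below are hypothetical.
env_entries = [
    {"name": "DB_PASSWORD", "value": "example-only"},
    {"name": "DB_PASSWORD",
     "valueFrom": {"configMapKeyRef": {"name": "app-config", "key": "password"}}},
    {"name": "DB_PASSWORD",
     "valueFrom": {"secretKeyRef": {"name": "app-secret", "key": "password"}}},
]

for entry in env_entries:
    print(entry["name"], "->", classify_env(entry))
```

A static check could then reject any sensitive-looking variable whose classification is not `secret`.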

We can install IDE plugins that help during development, but they are not relevant for the CKS exam.

Kubesec

Kubesec is an open source tool that helps with risk analysis of Kubernetes resources.

It performs a static analysis of the manifest and compares it against security best practices to give us insights for improvement. The tool does not fix anything; it only checks and generates a report.

We can use kubesec through:

- Binary (local or in pipelines)

- Docker container (local or in pipelines)

- Kubectl plugin: once the binary is installed, we can use it from within kubectl.

- Admission Controller (kubesec-webhook)

Knowing this, we can use it at several stages, from development through CI and CD.

Let's try an analysis without installing the binary, using only Docker.

root@cks-master:~# k run nginx --image=nginx -oyaml --dry-run=client > nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

spec:

  containers:

  - image: nginx

    name: nginx

    resources: {}

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

# Note that we analyze the file without creating the pod; nothing is running on the cluster.

# Running with Docker, passing nginx.yaml as standard input.

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

Unable to find image 'kubesec/kubesec:512c5e0' locally

512c5e0: Pulling from kubesec/kubesec

c87736221ed0: Pull complete

5dfbfe40753f: Pull complete

0ab7f5410346: Pull complete

b91424b4f19c: Pull complete

0cff159cca1a: Pull complete

32836ab12770: Pull complete

Digest: sha256:8b1e0856fc64cabb1cf91fea6609748d3b3ef204a42e98d0e20ebadb9131bcb7

Status: Downloaded newer image for kubesec/kubesec:512c5e0

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 0 points",

"score": 0,

"scoring": {

# Some advice...

"advise": [

{

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost"

},

{

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion"

},

{

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster"

},

{

"selector": "containers[] .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege"

},

{

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion"

},

{

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege"

},

{

"selector": "containers[] .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's user table"

},

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

},

{

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface"

},

{

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster"

},

{

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface"

}

]

}

}

]
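A report like the one above can drive a pipeline decision. The following is a minimal sketch of such a gate, assuming the JSON printed by `kubesec scan` has been captured as a string; the `gate` function, the threshold values, and the trimmed sample report are illustrative assumptions, and only fields visible in the output above (`object`, `valid`, `score`) are used.

```python
import json


def gate(report_json, min_score):
    """Return (ok, messages) for a kubesec JSON report and a minimum score."""
    results = json.loads(report_json)
    ok = True
    messages = []
    for result in results:
        score = result.get("score", 0)
        # Fail when the manifest is invalid or scores below the threshold.
        if not result.get("valid", False) or score < min_score:
            ok = False
        messages.append(f"{result.get('object')}: score {score} (minimum {min_score})")
    return ok, messages


# Trimmed sample in the shape of the scan output; the advise list is omitted here.
REPORT = """
[
  {
    "object": "Pod/nginx.default",
    "valid": true,
    "message": "Passed with a score of 0 points",
    "score": 0,
    "scoring": {}
  }
]
"""

MIN_SCORE = 5  # hypothetical threshold chosen by the team

ok, messages = gate(REPORT, MIN_SCORE)
for message in messages:
    print(message)
# A real pipeline step would exit non-zero here when ok is False.
print("gate passed" if ok else "gate failed")
```

In CI the report would come from the scan command's standard output rather than from a literal string.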

Let's implement some improvements by adding resources, a service account, and a security context.

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

spec:

  containers:

  - image: nginx

    name: nginx

    resources:

      requests:

        memory: "128Mi"

        cpu: "250m"

      limits:

        memory: "256Mi"

        cpu: "500m"

    securityContext:

      runAsNonRoot: true

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 6 points",

"score": 6, # The score improved

"scoring": {

"advise": [

{

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost"

},

{

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface"

},

{

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface"

},

{

"selector": "containers[] .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's user table"

},

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

}

]

}

}

]

Let's improve it further...

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

spec:

  serviceAccountName: nginx

  containers:

  - image: nginx

    name: nginx

    resources:

      requests:

        memory: "128Mi"

        cpu: "250m"

      limits:

        memory: "256Mi"

        cpu: "500m"

    securityContext:

      runAsNonRoot: true

      runAsUser: 10001

      readOnlyRootFilesystem: true

      capabilities:

        drop:

        - ALL

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

Checking...

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 12 points",

"score": 12,

"scoring": {

"advise": [

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

}

]

}

}

]

Only AppArmor and seccomp remain, and we will cover them later in the course. Let's apply the manifest and see whether it runs.

# Creating the service account we are going to use

root@cks-master:~# k create sa nginx

serviceaccount/nginx created

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 0/1 CrashLoopBackOff 1 (6s ago) 9s

# Let's look for the problem

root@cks-master:~# k describe pod nginx

...

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-dfr9z:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 18s default-scheduler Successfully assigned default/nginx to cks-worker

Normal Pulled 17s kubelet Successfully pulled image "nginx" in 387ms (387ms including waiting). Image size: 71026652 bytes.

Normal Pulling 16s (x2 over 17s) kubelet Pulling image "nginx"

Normal Created 15s (x2 over 17s) kubelet Created container nginx

Normal Started 15s (x2 over 17s) kubelet Started container nginx

Normal Pulled 15s kubelet Successfully pulled image "nginx" in 357ms (357ms including waiting). Image size: 71026652 bytes.

Warning BackOff 14s (x2 over 15s) kubelet Back-off restarting failed container nginx in pod nginx_default(f9d3097e-95d4-4da2-a184-bb2e0d4907f4)

root@cks-master:~# k logs pods/nginx --tail 10

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: can not modify /etc/nginx/conf.d/default.conf (read-only file system?)

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/09/02 19:35:05 [warn] 1#1: the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:2

nginx: [warn] the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:2

2024/09/02 19:35:05 [emerg] 1#1: mkdir() "/var/cache/nginx/client_temp" failed (30: Read-only file system)

nginx: [emerg] mkdir() "/var/cache/nginx/client_temp" failed (30: Read-only file system)

By following the rules we lost some permissions that used to be bypassed by running as root, and we made the filesystem read-only.

We need nginx to work under these constraints. We could use an image prepared for a non-root user, as we saw earlier, but let's try to solve it ourselves.

For every directory nginx needs to write to, we can mount an emptyDir to solve the problem.

For the nginx configuration, we can create a ConfigMap and mount it under /etc/nginx/conf.d/, where nginx looks for its configuration, and create the other directories nginx needs to write to.
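The idea of covering every writable directory with an emptyDir can also be checked statically. Below is a minimal sketch, assuming the pod spec has been parsed into a dictionary; `uncovered_paths` is a hypothetical helper, and apart from `/var/cache/nginx/client_temp`, which comes from the log above, the paths in the example are assumptions for illustration.

```python
def uncovered_paths(pod_spec, container_name, writable_paths):
    """Return the paths that no emptyDir mount covers in the given container."""
    # Names of the volumes backed by emptyDir.
    empty_dirs = {v["name"] for v in pod_spec.get("volumes", []) if "emptyDir" in v}
    container = next(c for c in pod_spec["containers"] if c["name"] == container_name)
    mounts = [
        m["mountPath"].rstrip("/")
        for m in container.get("volumeMounts", [])
        if m["name"] in empty_dirs
    ]
    missing = []
    for path in writable_paths:
        path = path.rstrip("/")
        # A path is covered when it is a mount point or lives below one.
        if not any(path == m or path.startswith(m + "/") for m in mounts):
            missing.append(path)
    return missing


# Pod spec fragment with two emptyDir mounts (illustrative).
spec = {
    "containers": [{
        "name": "nginx",
        "volumeMounts": [
            {"name": "nginx-cache", "mountPath": "/var/cache/nginx"},
            {"name": "nginx-run", "mountPath": "/var/run/"},
        ],
    }],
    "volumes": [
        {"name": "nginx-cache", "emptyDir": {}},
        {"name": "nginx-run", "emptyDir": {}},
    ],
}

# Paths the process is assumed to write to; /tmp is included only as an example.
needed = ["/var/cache/nginx/client_temp", "/var/run/nginx.pid", "/tmp"]
print(uncovered_paths(spec, "nginx", needed))
```

Any path returned by the helper would still hit the read-only root filesystem at runtime.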

root@cks-master:~# vim configmap.yaml

root@cks-master:~# cat configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

  name: nginx-config-map

data:

  default.conf: |

    server {

      listen 8080;

      server_name localhost;

      location / {

        root /usr/share/nginx/html;

        index index.html;

      }

    }

root@cks-master:~# k apply -f configmap.yaml

configmap/nginx-config-map created

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

spec:

  serviceAccountName: nginx

  containers:

  - image: nginx

    name: nginx

    resources:

      requests:

        memory: "128Mi"

        cpu: "250m"

      limits:

        memory: "256Mi"

        cpu: "500m"

    securityContext:

      runAsNonRoot: true

      runAsUser: 10001

      readOnlyRootFilesystem: true

      capabilities:

        drop:

        - ALL

    volumeMounts:

    - name: nginx-cache

      mountPath: /var/cache/nginx

    - name: nginx-config

      mountPath: /etc/nginx/conf.d/default.conf

      subPath: default.conf

    - name: nginx-run

      mountPath: /var/run/

  volumes:

  - name: nginx-cache

    emptyDir: {}

  - name: nginx-config

    configMap:

      name: nginx-config-map

  - name: nginx-run

    emptyDir: {}

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3s

Back to AppArmor and seccomp: let's address them just to make the report look good!

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

  # Adding these annotations as kubesec requested

  annotations:

    container.seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

    container.apparmor.security.beta.kubernetes.io/nginx: 'runtime/default'

spec:

  serviceAccountName: nginx

  containers:

  - image: nginx

    name: nginx

    resources:

      requests:

        memory: "128Mi"

        cpu: "250m"

      limits:

        memory: "256Mi"

        cpu: "500m"

    securityContext:

      runAsNonRoot: true

      runAsUser: 10001

      readOnlyRootFilesystem: true

      capabilities:

        drop:

        - ALL

      # When applying the pod we see that, starting with 1.30, the AppArmor annotation is deprecated; kubesec will probably have to adapt and look at this field instead.

      appArmorProfile:

        type: RuntimeDefault

    volumeMounts:

    - name: nginx-cache

      mountPath: /var/cache/nginx

    - name: nginx-config

      mountPath: /etc/nginx/conf.d/default.conf

      subPath: default.conf

    - name: nginx-run

      mountPath: /var/run/

  volumes:

  - name: nginx-cache

    emptyDir: {}

  - name: nginx-config

    configMap:

      name: nginx-config-map

  - name: nginx-run

    emptyDir: {}

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

# Solved, with the maximum score

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 16 points",

"score": 16,

"scoring": {}

}

]

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 5s

root@cks-master:~# k logs pods/nginx --tail 5

2024/09/02 19:54:03 [notice] 1#1: OS: Linux 5.15.0-1067-gcp

2024/09/02 19:54:03 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/09/02 19:54:03 [notice] 1#1: start worker processes

2024/09/02 19:54:03 [notice] 1#1: start worker process 21

2024/09/02 19:54:03 [notice] 1#1: start worker process 22

We can also install the binary and do the same thing.

root@cks-master:~# wget https://github.com/controlplaneio/kubesec/releases/download/v2.14.1/kubesec_linux_amd64.tar.gz


root@cks-master:~# tar -xzvf kubesec_linux_amd64.tar.gz

CHANGELOG.md

LICENSE

README.md

kubesec

root@cks-master:~# chmod +x kubesec

root@cks-master:~# mv kubesec /usr/local/bin/

root@cks-master:~# kubesec version

version 2.14.1

git commit 2d31e51659470451e6c51cb47be1df9e103fb49d

build date 2024-08-19T11:48:06Z

# We tried to compare with the version in the Docker image, but could not; this container was probably built directly from the project source.

# Looking at the kubesec page on Docker Hub, we saw that this image is 5 years old and is the one used in the tool's official documentation. https://hub.docker.com/r/kubesec/kubesec/tags?page=&page_size=&ordering=&name=512c5e0

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 version

version unknown

git commit unknown

build date unknown

# The output would be the same as for the command below, which switches to the newer version.

# root@cks-master:~# docker run -i kubesec/kubesec:v2.14.1 scan /dev/stdin < nginx.yaml

root@cks-master:~# kubesec scan nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"fileName": "nginx.yaml",

"message": "Passed with a score of 16 points",

"score": 16,

"scoring": {

"passed": [

{

"id": "ApparmorAny",

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY",

"points": 3

},

{

"id": "ServiceAccountName",

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege",

"points": 3

},

{

"id": "SeccompAny",

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats",

"points": 1

},

{

"id": "RunAsNonRoot",

"selector": ".spec, .spec.containers[] | .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege",

"points": 1

},

{

"id": "RunAsUser",

"selector": ".spec, .spec.containers[] | .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's users",

"points": 1

},

{

"id": "LimitsCPU",

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "LimitsMemory",

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "RequestsCPU",

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "RequestsMemory",

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "CapDropAny",

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface",

"points": 1

},

{

"id": "CapDropAll",

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface",

"points": 1

},

{

"id": "ReadOnlyRootFilesystem",

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost",

"points": 1

}

],

"advise": [

{

"id": "AutomountServiceAccountToken",

"selector": ".spec .automountServiceAccountToken == false",

"reason": "Disabling the automounting of Service Account Token reduces the attack surface of the API server",

"points": 1

},

{

"id": "RunAsGroup",

"selector": ".spec, .spec.containers[] | .securityContext .runAsGroup -gt 10000",

"reason": "Run as a high-UID group to avoid conflicts with the host's groups",

"points": 1

}

]

}

}

]

We ended up finding more improvements. Note that the report now also lists the items that passed, and there are two new advise entries.
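Since the newer report carries `id` and `points` for each entry, we can estimate how much the score would rise if every advise item were addressed. This is a minimal sketch; `summarize` is a hypothetical helper, and the sample report is trimmed to two passed entries while keeping both advise entries from the output above.

```python
import json


def summarize(report_json):
    """Summarize the first result of a kubesec JSON report."""
    result = json.loads(report_json)[0]
    scoring = result.get("scoring") or {}
    passed = scoring.get("passed", [])
    advise = scoring.get("advise", [])
    return {
        "score": result["score"],
        "passed_ids": [item["id"] for item in passed],
        "advise_ids": [item["id"] for item in advise],
        # Score we would reach if every advise entry were satisfied.
        "potential": result["score"] + sum(item.get("points", 0) for item in advise),
    }


# Trimmed sample: the passed list is shortened, the advise list is complete.
REPORT = """
[
  {
    "object": "Pod/nginx.default",
    "valid": true,
    "fileName": "nginx.yaml",
    "message": "Passed with a score of 16 points",
    "score": 16,
    "scoring": {
      "passed": [
        {"id": "ApparmorAny", "points": 3},
        {"id": "ServiceAccountName", "points": 3}
      ],
      "advise": [
        {"id": "AutomountServiceAccountToken", "points": 1},
        {"id": "RunAsGroup", "points": 1}
      ]
    }
  }
]
"""

summary = summarize(REPORT)
print("current score:", summary["score"])
print("still advised:", ", ".join(summary["advise_ids"]))
print("potential score:", summary["potential"])
```

For this report the potential score is the current 16 plus one point for each of the two advise entries.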

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1

kind: Pod

metadata:

  creationTimestamp: null

  labels:

    run: nginx

  name: nginx

  annotations:

    container.seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

    container.apparmor.security.beta.kubernetes.io/nginx: 'runtime/default'

spec:

  serviceAccountName: nginx

  automountServiceAccountToken: false # Added

  containers:

  - image: nginx

    name: nginx

    resources:

      requests:

        memory: "128Mi"

        cpu: "250m"

      limits:

        memory: "256Mi"

        cpu: "500m"

    securityContext:

      runAsNonRoot: true

      runAsUser: 10001

      runAsGroup: 10001 # Added

      readOnlyRootFilesystem: true

      capabilities:

        drop:

        - ALL

      appArmorProfile:

        type: RuntimeDefault

    volumeMounts:

    - name: nginx-cache

      mountPath: /var/cache/nginx

    - name: nginx-config

      mountPath: /etc/nginx/conf.d/default.conf

      subPath: default.conf

    - name: nginx-run

      mountPath: /var/run/

  volumes:

  - name: nginx-cache

    emptyDir: {}

  - name: nginx-config

    configMap:

      name: nginx-config-map

  - name: nginx-run

    emptyDir: {}

  dnsPolicy: ClusterFirst

  restartPolicy: Always

status: {}

root@cks-master:~# kubesec scan nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"fileName": "nginx.yaml",

"message": "Passed with a score of 18 points",

"score": 18,

"scoring": {

"passed": [

{

"id": "ApparmorAny",

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY",

"points": 3

},

{

"id": "ServiceAccountName",

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege",

"points": 3

},

{

"id": "SeccompAny",

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats",

"points": 1

},

{

"id": "AutomountServiceAccountToken",

"selector": ".spec .automountServiceAccountToken == false",

"reason": "Disabling the automounting of Service Account Token reduces the attack surface of the API server",

"points": 1

},

{

"id": "RunAsGroup",

"selector": ".spec, .spec.containers[] | .securityContext .runAsGroup -gt 10000",

"reason": "Run as a high-UID group to avoid conflicts with the host's groups",

"points": 1

},

{

"id": "RunAsNonRoot",

"selector": ".spec, .spec.containers[] | .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege",

"points": 1

},

{

"id": "RunAsUser",

"selector": ".spec, .spec.containers[] | .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's users",

"points": 1

},

{

"id": "LimitsCPU",

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "LimitsMemory",

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "RequestsCPU",

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "RequestsMemory",

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "CapDropAny",

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface",

"points": 1

},

{

"id": "CapDropAll",

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface",

"points": 1

},

{

"id": "ReadOnlyRootFilesystem",

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost",

"points": 1

}

]

}

}

]

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3s