Static Analysis

Static analysis inspects source code and configuration files without executing them. It is something we should do manually before the pipeline and again at various pipeline stages for verification.

- It checks code against rules.

- Some rules already exist and are maintained by the community.

- We can write our own rules to stop a pipeline when necessary, keeping in mind that further rules will also be checked later by policy tools such as OPA.

We can check, for example, if:

- Resource limits have been defined for pods. It's always good to define them so that a pod cannot consume resources uncontrollably.

- The service account in use is not the default one. A dedicated service account lets us grant granular permissions.

- Sensitive data is referenced through Secrets. Sensitive values should not be hardcoded in manifests as environment variables or stored in ConfigMaps.

- Dockerfiles do not contain sensitive data either.

- Etc.

Of course, everything depends on the project's use case and the company.
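A couple of the checks listed above can be sketched as a small rule function. This is only an illustration, not how any particular tool implements them: the manifest is assumed to be already parsed into a Python dict, and the function name `check_pod` and its messages are hypothetical.

```python
def check_pod(pod: dict) -> list:
    """Return a list of findings for a parsed Pod manifest (hypothetical rules)."""
    findings = []
    spec = pod.get("spec", {})

    # Rule: a dedicated service account should be used instead of "default".
    if spec.get("serviceAccountName", "default") == "default":
        findings.append("pod uses the default service account")

    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        # Rule: every container should declare CPU and memory limits.
        limits = (container.get("resources") or {}).get("limits") or {}
        for resource in ("cpu", "memory"):
            if resource not in limits:
                findings.append(f"container {name} has no {resource} limit")

    return findings


# Example input: a pod with no service account and empty resources.
pod = {
    "kind": "Pod",
    "spec": {"containers": [{"name": "nginx", "image": "nginx", "resources": {}}]},
}
for finding in check_pod(pod):
    print(finding)
```

A pipeline step could fail the build whenever the returned list is not empty.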

If we put static analysis in a CI/CD pipeline, where can these analyses go? Note that static analysis happens before the Deploy stage. From the Deploy stage onward, a different kind of analysis applies: policy analysis.

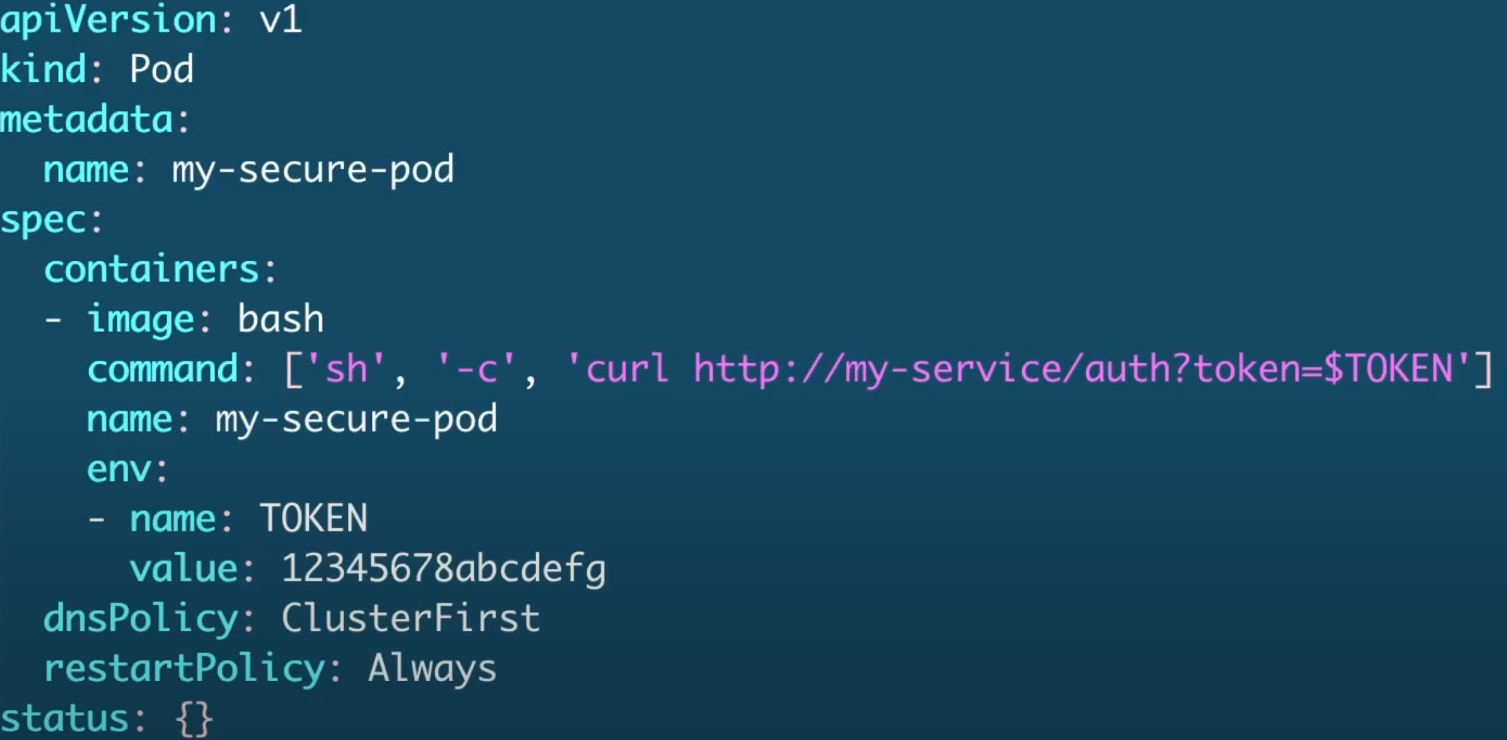

Let's manually analyze the manifest below, which is the first stage of static analysis.

We can see right away that sensitive values are hardcoded directly in the manifest, which should never be done.

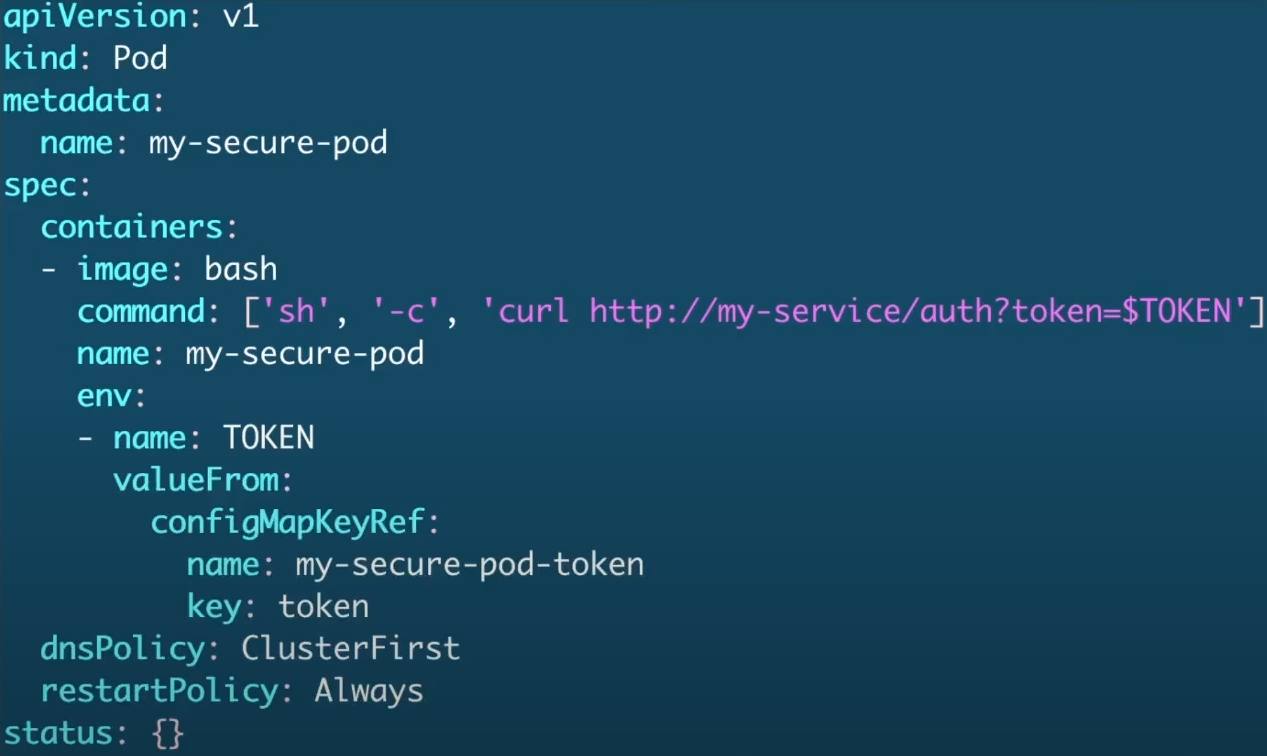

This only changes how the value is referenced; the value itself is still clearly exposed.

This already looks better, but it's still not the best scenario, since we could use Secrets. ConfigMaps may look like Secrets, but they are not!

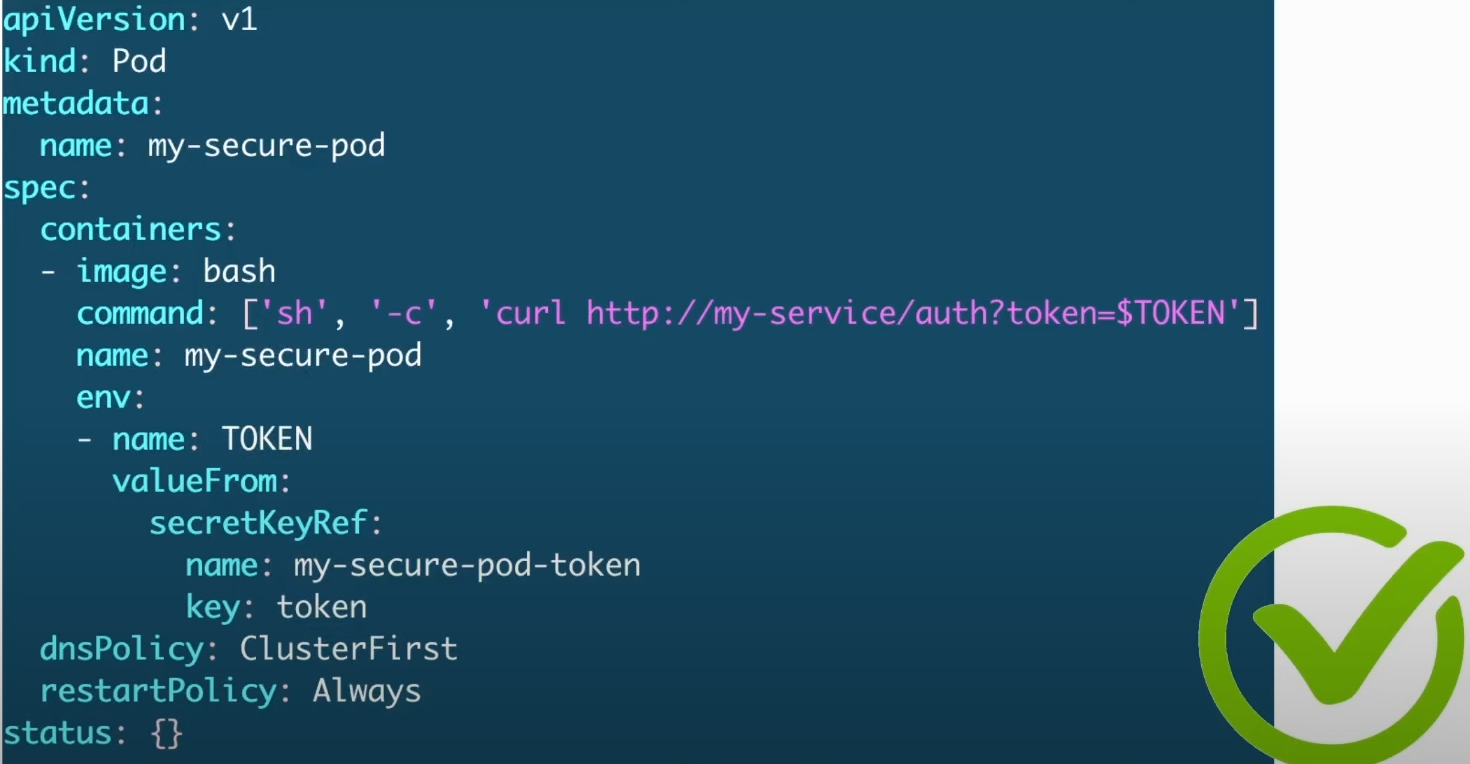

And this is the best scenario. Secrets are handled more securely than ConfigMaps and can be encrypted at rest. There are also external secret management options, but within the scope of the CKS, this is it.
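To make the three scenarios concrete, the sketch below classifies each sensitive-looking environment variable of a container by where its value comes from. It assumes the manifest is already parsed into a dict. The function name `classify_env`, the list of name fragments treated as sensitive, and the sample values are all hypothetical choices for illustration.

```python
# Assumed naming convention for spotting sensitive variables (illustrative only).
SENSITIVE_HINTS = ("PASSWORD", "TOKEN", "SECRET", "KEY")


def classify_env(container: dict) -> dict:
    """Map each sensitive-looking env var to 'hardcoded', 'configmap', 'secret' or 'other'."""
    result = {}
    for env in container.get("env", []):
        name = env.get("name", "")
        if not any(hint in name.upper() for hint in SENSITIVE_HINTS):
            continue  # name does not look sensitive, skip it
        source = env.get("valueFrom") or {}
        if "value" in env:
            result[name] = "hardcoded"   # worst case: the value sits in the manifest
        elif "configMapKeyRef" in source:
            result[name] = "configmap"   # moved elsewhere, but still plain text
        elif "secretKeyRef" in source:
            result[name] = "secret"      # preferred option
        else:
            result[name] = "other"
    return result


container = {
    "name": "app",
    "env": [
        {"name": "DB_PASSWORD", "value": "example-value"},
        {"name": "API_TOKEN",
         "valueFrom": {"configMapKeyRef": {"name": "cfg", "key": "token"}}},
        {"name": "SIGNING_KEY",
         "valueFrom": {"secretKeyRef": {"name": "app-secret", "key": "signing"}}},
        {"name": "LOG_LEVEL", "value": "debug"},
    ],
}
print(classify_env(container))
```

Matching on variable names is a heuristic; it can miss secrets with unusual names and flag harmless ones.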

We can install IDE plugins that help during development, but they are not available in the CKS exam.

Kubesec

Kubesec is an open-source tool that helps with risk analysis for Kubernetes resources.

It performs static analysis of the manifest and compares it against security best practices to give us improvement insights. The tool doesn't make corrections, only verification and report generation.

We can use kubesec through:

- Binary (local or in pipelines)

- Docker container (local or in pipelines)

- Kubectl plugin

  - Once the binary is installed, we can use it from within kubectl.

- Admission controller (kubesec-webhook)

Knowing this, we can use it at various stages: while developing, during CI, and during CD.

Let's test an analysis without installing the binary, using only Docker.

root@cks-master:~# k run nginx --image=nginx -oyaml --dry-run=client > nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

# Note that we analyze the file without creating the pod; nothing is running in the cluster.

# Running with docker, passing nginx.yaml on standard input.

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

Unable to find image 'kubesec/kubesec:512c5e0' locally

512c5e0: Pulling from kubesec/kubesec

c87736221ed0: Pull complete

5dfbfe40753f: Pull complete

0ab7f5410346: Pull complete

b91424b4f19c: Pull complete

0cff159cca1a: Pull complete

32836ab12770: Pull complete

Digest: sha256:8b1e0856fc64cabb1cf91fea6609748d3b3ef204a42e98d0e20ebadb9131bcb7

Status: Downloaded newer image for kubesec/kubesec:512c5e0

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 0 points",

"score": 0,

"scoring": {

# Some advice...

"advise": [

{

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost"

},

{

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion"

},

{

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster"

},

{

"selector": "containers[] .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege"

},

{

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion"

},

{

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege"

},

{

"selector": "containers[] .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's user table"

},

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

},

{

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface"

},

{

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster"

},

{

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface"

}

]

}

}

]
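Because the report is JSON, a pipeline step can parse it and decide whether to stop. The sketch below is one possible gate, not part of kubesec itself: the `gate` function and the `min_score` threshold are hypothetical, and the embedded report is a shortened copy of the output above.

```python
import json


def gate(report_json: str, min_score: int) -> list:
    """Return a message for every scanned object that is invalid or below min_score."""
    failures = []
    for item in json.loads(report_json):
        if not item.get("valid", False):
            failures.append(f"{item.get('object')}: invalid manifest")
        elif item.get("score", 0) < min_score:
            advice = item.get("scoring", {}).get("advise", [])
            failures.append(
                f"{item.get('object')}: score {item.get('score')} < {min_score}, "
                f"{len(advice)} suggestions"
            )
    return failures


# Shortened report with a single piece of advice.
report = """
[{"object": "Pod/nginx.default", "valid": true,
  "message": "Passed with a score of 0 points", "score": 0,
  "scoring": {"advise": [
    {"selector": "containers[] .resources .limits .cpu",
     "reason": "Enforcing CPU limits prevents DOS via resource exhaustion"}
  ]}}]
"""
failures = gate(report, min_score=5)
for line in failures:
    print(line)
```

In a real pipeline the report would come from the scan command's standard output, and the step would exit with a non-zero status when `failures` is not empty.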

Let's implement some improvements by adding resources, a service account, and a security context.

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "256Mi"
        cpu: "500m"
    securityContext:
      runAsNonRoot: true
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 6 points",

"score": 6, # The score improved

"scoring": {

"advise": [

{

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost"

},

{

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface"

},

{

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface"

},

{

"selector": "containers[] .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's user table"

},

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

}

]

}

}

]

Let's improve it further.

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
spec:
  serviceAccountName: nginx
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "256Mi"
        cpu: "500m"
    securityContext:
      runAsNonRoot: true
      runAsUser: 10001
      readOnlyRootFilesystem: true
      capabilities:
        drop:
        - ALL
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

Checking...

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 12 points",

"score": 12,

"scoring": {

"advise": [

{

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY"

}

]

}

}

]

Only AppArmor and seccomp remain, which we'll see later in the course. Let's apply the manifest to see whether the pod actually runs.

# Creating the service account we'll use

root@cks-master:~# k create sa nginx

serviceaccount/nginx created

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 0/1 CrashLoopBackOff 1 (6s ago) 9s

# Let's find the problem

root@cks-master:~# k describe pod nginx

...

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-dfr9z:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 18s default-scheduler Successfully assigned default/nginx to cks-worker

Normal Pulled 17s kubelet Successfully pulled image "nginx" in 387ms (387ms including waiting). Image size: 71026652 bytes.

Normal Pulling 16s (x2 over 17s) kubelet Pulling image "nginx"

Normal Created 15s (x2 over 17s) kubelet Created container nginx

Normal Started 15s (x2 over 17s) kubelet Started container nginx

Normal Pulled 15s kubelet Successfully pulled image "nginx" in 357ms (357ms including waiting). Image size: 71026652 bytes.

Warning BackOff 14s (x2 over 15s) kubelet Back-off restarting failed container nginx in pod nginx_default(f9d3097e-95d4-4da2-a184-bb2e0d4907f4)

root@cks-master:~# k logs pods/nginx --tail 10

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: can not modify /etc/nginx/conf.d/default.conf (read-only file system?)

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/09/02 19:35:05 [warn] 1#1: the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:2

nginx: [warn] the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:2

2024/09/02 19:35:05 [emerg] 1#1: mkdir() "/var/cache/nginx/client_temp" failed (30: Read-only file system)

nginx: [emerg] mkdir() "/var/cache/nginx/client_temp" failed (30: Read-only file system)

By following the recommendations, we lost some permissions that we previously had implicitly as the root user, and we made the root filesystem read-only.

We still need nginx to work under these constraints. We could use an image prepared to run as a non-root user, as we saw previously, but let's try to solve it with this one.

For every directory nginx needs to write to, we can mount an emptyDir volume.

For the nginx configuration, we can create a ConfigMap and mount it under /etc/nginx/conf.d/, where nginx looks for its configuration, and create emptyDir volumes for the other directories nginx writes to.
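The emptyDir idea can be sketched as a small transformation on a parsed manifest. This is only an illustration of the pattern, assuming the pod is a Python dict; the helper name `add_writable_dirs` is hypothetical, and the two paths are the ones this walkthrough ends up mounting.

```python
import copy


def add_writable_dirs(pod: dict, container_name: str, paths: dict) -> dict:
    """Return a copy of pod with one emptyDir volume mounted at each path.

    paths maps a volume name to the mount path inside the container.
    """
    patched = copy.deepcopy(pod)  # leave the original manifest untouched
    spec = patched["spec"]
    volumes = spec.setdefault("volumes", [])
    for container in spec["containers"]:
        if container["name"] != container_name:
            continue
        mounts = container.setdefault("volumeMounts", [])
        for volume_name, mount_path in paths.items():
            volumes.append({"name": volume_name, "emptyDir": {}})
            mounts.append({"name": volume_name, "mountPath": mount_path})
    return patched


pod = {
    "spec": {
        "containers": [
            {"name": "nginx", "image": "nginx",
             "securityContext": {"readOnlyRootFilesystem": True}}
        ]
    }
}
patched = add_writable_dirs(
    pod, "nginx", {"nginx-cache": "/var/cache/nginx", "nginx-run": "/var/run"}
)
print(patched["spec"]["volumes"])
print(patched["spec"]["containers"][0]["volumeMounts"])
```

The root filesystem stays read-only; only the mounted paths become writable, and their contents are discarded when the pod is removed.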

root@cks-master:~# vim configmap.yaml

root@cks-master:~# cat configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config-map
data:
  default.conf: |
    server {
      listen 8080;
      server_name localhost;
      location / {
        root /usr/share/nginx/html;
        index index.html;
      }
    }

root@cks-master:~# k apply -f configmap.yaml

configmap/nginx-config-map created

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
spec:
  serviceAccountName: nginx
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "256Mi"
        cpu: "500m"
    securityContext:
      runAsNonRoot: true
      runAsUser: 10001
      readOnlyRootFilesystem: true
      capabilities:
        drop:
        - ALL
    volumeMounts:
    - name: nginx-cache
      mountPath: /var/cache/nginx
    - name: nginx-config
      mountPath: /etc/nginx/conf.d/default.conf
      subPath: default.conf
    - name: nginx-run
      mountPath: /var/run/
  volumes:
  - name: nginx-cache
    emptyDir: {}
  - name: nginx-config
    configMap:
      name: nginx-config-map
  - name: nginx-run
    emptyDir: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3s

Going back to AppArmor and seccomp, let's address them as well, just to clean up the report!

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
  # Adding these annotations as requested by kubesec
  annotations:
    container.seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    container.apparmor.security.beta.kubernetes.io/nginx: 'runtime/default'
spec:
  serviceAccountName: nginx
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "256Mi"
        cpu: "500m"
    securityContext:
      runAsNonRoot: true
      runAsUser: 10001
      readOnlyRootFilesystem: true
      capabilities:
        drop:
        - ALL
      # When applying the pod, we see that the AppArmor annotation is deprecated as of Kubernetes 1.30 in favor of this field; kubesec will probably need to be adjusted to look here.
      appArmorProfile:
        type: RuntimeDefault
    volumeMounts:
    - name: nginx-cache
      mountPath: /var/cache/nginx
    - name: nginx-config
      mountPath: /etc/nginx/conf.d/default.conf
      subPath: default.conf
    - name: nginx-run
      mountPath: /var/run/
  volumes:
  - name: nginx-cache
    emptyDir: {}
  - name: nginx-config
    configMap:
      name: nginx-config-map
  - name: nginx-run
    emptyDir: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

# All advice resolved: maximum score for this kubesec version

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"message": "Passed with a score of 16 points",

"score": 16,

"scoring": {}

}

]

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 5s

root@cks-master:~# k logs pods/nginx --tail 5

2024/09/02 19:54:03 [notice] 1#1: OS: Linux 5.15.0-1067-gcp

2024/09/02 19:54:03 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/09/02 19:54:03 [notice] 1#1: start worker processes

2024/09/02 19:54:03 [notice] 1#1: start worker process 21

2024/09/02 19:54:03 [notice] 1#1: start worker process 22

We can also install the binary and do the same thing.

root@cks-master:~# wget https://github.com/controlplaneio/kubesec/releases/download/v2.14.1/kubesec_linux_amd64.tar.gz

root@cks-master:~# tar -xzvf kubesec_linux_amd64.tar.gz

CHANGELOG.md

LICENSE

README.md

kubesec

root@cks-master:~# chmod +x kubesec

root@cks-master:~# mv kubesec /usr/local/bin/

root@cks-master:~# kubesec version

version 2.14.1

git commit 2d31e51659470451e6c51cb47be1df9e103fb49d

build date 2024-08-19T11:48:06Z

# We can't compare this with the Docker image's version: the container reports no version information, probably because it was built directly from the project source.

# According to the Docker Hub page for kubesec, this image is 5 years old, yet it is the one used in the tool's official documentation. https://hub.docker.com/r/kubesec/kubesec/tags?page=&page_size=&ordering=&name=512c5e0

root@cks-master:~# docker run -i kubesec/kubesec:512c5e0 version

version unknown

git commit unknown

build date unknown

# Running the newer image version with Docker, as in the commented command below, would give the same output as the local binary.

# root@cks-master:~# docker run -i kubesec/kubesec:v2.14.1 scan /dev/stdin < nginx.yaml

root@cks-master:~# kubesec scan nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"fileName": "nginx.yaml",

"message": "Passed with a score of 16 points",

"score": 16,

"scoring": {

"passed": [

{

"id": "ApparmorAny",

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY",

"points": 3

},

{

"id": "ServiceAccountName",

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege",

"points": 3

},

{

"id": "SeccompAny",

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats",

"points": 1

},

{

"id": "RunAsNonRoot",

"selector": ".spec, .spec.containers[] | .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege",

"points": 1

},

{

"id": "RunAsUser",

"selector": ".spec, .spec.containers[] | .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's users",

"points": 1

},

{

"id": "LimitsCPU",

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "LimitsMemory",

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "RequestsCPU",

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "RequestsMemory",

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "CapDropAny",

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface",

"points": 1

},

{

"id": "CapDropAll",

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface",

"points": 1

},

{

"id": "ReadOnlyRootFilesystem",

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost",

"points": 1

}

],

"advise": [

{

"id": "AutomountServiceAccountToken",

"selector": ".spec .automountServiceAccountToken == false",

"reason": "Disabling the automounting of Service Account Token reduces the attack surface of the API server",

"points": 1

},

{

"id": "RunAsGroup",

"selector": ".spec, .spec.containers[] | .securityContext .runAsGroup -gt 10000",

"reason": "Run as a high-UID group to avoid conflicts with the host's groups",

"points": 1

}

]

}

}

]

We ended up finding more improvements. The newer version now also lists the rules that passed, and it gives us two new pieces of advice.
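Since each rule in the newer report carries a `points` field, we can check how the score is composed and how many points are still available. The sketch below is a hypothetical helper (`summarize` is not a kubesec feature), and the embedded report keeps only the `id` and `points` of each rule from the output above.

```python
import json


def summarize(report_json: str) -> dict:
    """Summarize the first object in a report: score, points earned, points left, advice ids."""
    item = json.loads(report_json)[0]
    scoring = item.get("scoring", {})
    passed = scoring.get("passed", [])
    advise = scoring.get("advise", [])
    return {
        "score": item["score"],
        "passed_points": sum(rule.get("points", 0) for rule in passed),
        "available_points": sum(rule.get("points", 0) for rule in advise),
        "todo": [rule["id"] for rule in advise],
    }


# Same rules and points as in the report above, with the other fields dropped.
report = json.dumps([{
    "object": "Pod/nginx.default",
    "score": 16,
    "scoring": {
        "passed": [
            {"id": "ApparmorAny", "points": 3},
            {"id": "ServiceAccountName", "points": 3},
            {"id": "SeccompAny", "points": 1},
            {"id": "RunAsNonRoot", "points": 1},
            {"id": "RunAsUser", "points": 1},
            {"id": "LimitsCPU", "points": 1},
            {"id": "LimitsMemory", "points": 1},
            {"id": "RequestsCPU", "points": 1},
            {"id": "RequestsMemory", "points": 1},
            {"id": "CapDropAny", "points": 1},
            {"id": "CapDropAll", "points": 1},
            {"id": "ReadOnlyRootFilesystem", "points": 1},
        ],
        "advise": [
            {"id": "AutomountServiceAccountToken", "points": 1},
            {"id": "RunAsGroup", "points": 1},
        ],
    },
}])
summary = summarize(report)
print(summary)
```

In this particular report the points of the passed rules add up to the score of 16, and the two remaining pieces of advice are worth one point each.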

root@cks-master:~# vim nginx.yaml

root@cks-master:~# cat nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
  annotations:
    container.seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    container.apparmor.security.beta.kubernetes.io/nginx: 'runtime/default'
spec:
  serviceAccountName: nginx
  automountServiceAccountToken: false # Added
  containers:
  - image: nginx
    name: nginx
    resources:
      requests:
        memory: "128Mi"
        cpu: "250m"
      limits:
        memory: "256Mi"
        cpu: "500m"
    securityContext:
      runAsNonRoot: true
      runAsUser: 10001
      runAsGroup: 10001 # Added
      readOnlyRootFilesystem: true
      capabilities:
        drop:
        - ALL
      appArmorProfile:
        type: RuntimeDefault
    volumeMounts:
    - name: nginx-cache
      mountPath: /var/cache/nginx
    - name: nginx-config
      mountPath: /etc/nginx/conf.d/default.conf
      subPath: default.conf
    - name: nginx-run
      mountPath: /var/run/
  volumes:
  - name: nginx-cache
    emptyDir: {}
  - name: nginx-config
    configMap:
      name: nginx-config-map
  - name: nginx-run
    emptyDir: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

root@cks-master:~# kubesec scan nginx.yaml

[

{

"object": "Pod/nginx.default",

"valid": true,

"fileName": "nginx.yaml",

"message": "Passed with a score of 18 points",

"score": 18,

"scoring": {

"passed": [

{

"id": "ApparmorAny",

"selector": ".metadata .annotations .\"container.apparmor.security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater protection from unknown threats. WARNING: NOT PRODUCTION READY",

"points": 3

},

{

"id": "ServiceAccountName",

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access and should be configured with least privilege",

"points": 3

},

{

"id": "SeccompAny",

"selector": ".metadata .annotations .\"container.seccomp.security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure against unknown threats",

"points": 1

},

{

"id": "AutomountServiceAccountToken",

"selector": ".spec .automountServiceAccountToken == false",

"reason": "Disabling the automounting of Service Account Token reduces the attack surface of the API server",

"points": 1

},

{

"id": "RunAsGroup",

"selector": ".spec, .spec.containers[] | .securityContext .runAsGroup -gt 10000",

"reason": "Run as a high-UID group to avoid conflicts with the host's groups",

"points": 1

},

{

"id": "RunAsNonRoot",

"selector": ".spec, .spec.containers[] | .securityContext .runAsNonRoot == true",

"reason": "Force the running image to run as a non-root user to ensure least privilege",

"points": 1

},

{

"id": "RunAsUser",

"selector": ".spec, .spec.containers[] | .securityContext .runAsUser -gt 10000",

"reason": "Run as a high-UID user to avoid conflicts with the host's users",

"points": 1

},

{

"id": "LimitsCPU",

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "LimitsMemory",

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource exhaustion",

"points": 1

},

{

"id": "RequestsCPU",

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "RequestsMemory",

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing of resources across the cluster",

"points": 1

},

{

"id": "CapDropAny",

"selector": "containers[] .securityContext .capabilities .drop",

"reason": "Reducing kernel capabilities available to a container limits its attack surface",

"points": 1

},

{

"id": "CapDropAll",

"selector": "containers[] .securityContext .capabilities .drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those required to reduce syscall attack surface",

"points": 1

},

{

"id": "ReadOnlyRootFilesystem",

"selector": "containers[] .securityContext .readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious binaries being added to PATH and increase attack cost",

"points": 1

}

]

}

}

]

root@cks-master:~# k apply -f nginx.yaml

pod/nginx created

root@cks-master:~# k get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3s